LLM Agents for Offensive Security

Funding year 2025 / Scholarship Call #20 / Scholarship ID: 7733

In today's interconnected digital world, ensuring that software and systems are secure has never been more critical. One of the primary defenses against malicious actors is offensive security assessment, i.e., penetration testing (pen-testing), which identifies vulnerabilities before attackers can exploit them.

Alas, this vital practice is severely hampered by a pervasive challenge: a chronic and growing global shortage of skilled cybersecurity personnel, such as the white-hat hackers and penetration testers who perform these assessments. My research tackles this problem by leveraging Large Language Models (LLMs), the technology behind modern AI, to automate security testing, with the goal of improving the efficiency and coverage of security checks.

I started my PhD by investigating how hackers work, to better understand the problems and constraints they face. In 2023, I began analyzing the potential of LLMs for automating hacking work, building empirical prototypes for Linux systems and Windows Enterprise networks. Now that I have seen that LLMs can autonomously perform security tasks, I will investigate two directions: improving the consistency and reliability of autonomous LLM-driven pen-testing, and applying LLM techniques to interactively augment professional penetration testers.

Institution type: University

Themengebiet

Zielgruppe

Gesamtklassifikation

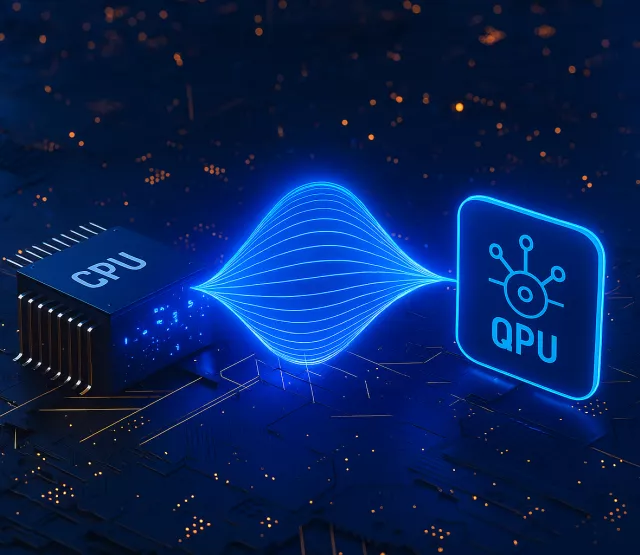

Technologie

Lizenz

Project Results

HackingBuddyGPT helps security researchers use LLMs to discover new attack vectors and save the world (or earn bug bounties) in 50 lines of code or less. In the long run, we hope to make the world a safer place by empowering security professionals to get more hacking done with AI: the more testing they can do, the safer we all become.
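
To make the "few lines of code" idea more concrete, here is a minimal sketch of the kind of loop an LLM hacking agent runs: the LLM proposes the next shell command, a harness executes it, and the output is appended to the history for the next round until a success condition or a round budget is reached. This is not HackingBuddyGPT's actual API. `run_agent`, `AgentRun`, `scripted_llm`, and `fake_target` are hypothetical names, and the "LLM" and "target" are scripted stand-ins so the sketch runs offline without a model or a live system.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical signatures: an "LLM" maps the (command, output) history to the
# next command; an "executor" maps a command to its output.
LLM = Callable[[List[Tuple[str, str]]], str]
Executor = Callable[[str], str]


@dataclass
class AgentRun:
    history: List[Tuple[str, str]] = field(default_factory=list)
    succeeded: bool = False


def run_agent(llm: LLM, execute: Executor,
              is_success: Callable[[str], bool], max_rounds: int = 10) -> AgentRun:
    """Ask the LLM for a command, run it, feed the output back; stop on success or budget."""
    run = AgentRun()
    for _ in range(max_rounds):
        command = llm(run.history).strip()
        output = execute(command)
        run.history.append((command, output))
        if is_success(output):
            run.succeeded = True
            break
    return run


# Scripted stand-ins (assumptions for illustration, not real tools or model output).
def scripted_llm(history):
    plan = ["id", "sudo -l", "sudo /bin/bash -c id"]
    return plan[min(len(history), len(plan) - 1)]


def fake_target(command):
    responses = {
        "id": "uid=1000(lowpriv) gid=1000(lowpriv)",
        "sudo -l": "(root) NOPASSWD: /bin/bash",
        "sudo /bin/bash -c id": "uid=0(root) gid=0(root)",
    }
    return responses.get(command, "command not found")


if __name__ == "__main__":
    result = run_agent(scripted_llm, fake_target, lambda out: "uid=0(root)" in out)
    for cmd, out in result.history:
        print(f"$ {cmd}\n{out}")
    print("root obtained:", result.succeeded)
```

Swapping `scripted_llm` for a real model call and `fake_target` for a real command executor is where an actual tool would do its work; keeping both as plain callables makes the loop easy to test in isolation.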

Cochise: Can LLMs Hack Enterprise Networks?

Autonomous Assumed Breach Penetration-Testing of Active Directory Networks. So basically, I use LLMs to hack Microsoft Active Directory networks... what could possibly go wrong?

This is a prototype I wrote to evaluate how well LLMs can perform Assumed Breach penetration-testing against Active Directory networks. I use the GOAD (Game of Active Directory) testbed as my target environment, place a Kali Linux VM into the testbed, and then direct my prototype, cochise, to hack it.
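
One practical piece of such a setup is the harness that runs LLM-chosen commands on the attacker VM and hands bounded output back to the model. The following is a minimal sketch, assuming the harness runs on the Kali VM itself and uses Python's standard `subprocess` module; `execute_command`, `truncate`, and `MAX_OUTPUT_CHARS` are hypothetical names, not taken from cochise.

```python
import subprocess

# Hypothetical cap so verbose tool output (e.g. scans) does not flood the LLM's context.
MAX_OUTPUT_CHARS = 4000


def truncate(text: str, limit: int = MAX_OUTPUT_CHARS) -> str:
    """Keep the head and tail of long output and mark how much was dropped."""
    if len(text) <= limit:
        return text
    half = limit // 2
    dropped = len(text) - 2 * half
    return f"{text[:half]}\n[... {dropped} characters omitted ...]\n{text[-half:]}"


def execute_command(command: str, timeout_s: int = 60) -> str:
    """Run an LLM-proposed shell command and return truncated stdout/stderr plus exit code."""
    try:
        proc = subprocess.run(
            command,
            shell=True,           # the LLM emits complete shell command lines
            capture_output=True,  # collect stdout and stderr instead of printing them
            text=True,
            timeout=timeout_s,    # a hung tool must not stall the whole run
        )
    except subprocess.TimeoutExpired:
        return f"[command timed out after {timeout_s}s]"
    output = proc.stdout + proc.stderr
    return truncate(output) + f"\n[exit code {proc.returncode}]"
```

Using `shell=True` on model-generated text is only reasonable because the executing machine is a disposable attacker VM inside the lab; keeping the head and tail of long output preserves both the command's banner and its final result.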