Funding year 2023 / Project Call #18 / Project ID: 6872 / Project: MONITAUR

MONITAUR Unleashed

As our project reaches its final milestone, we’re excited to share its outcomes and reflect on the tools we’ve developed. In this post, we introduce a modular monitoring framework that helps detect suspicious behavior in APIs built around machine learning models or other complex program logic. The result is a lightweight toolkit suitable for both experimentation and real-world deployment.

Why a Different Kind of Monitoring

Traditional API monitoring focuses on server health, latency, and error rates, which are crucial indicators for maintaining infrastructure. However, for APIs that are built on top of a machine learning model, these indicators are insufficient for understanding what happens inside the application, because its behavior depends heavily on the model’s predictions. The model can process complex, high-dimensional inputs (like images or text), and its outputs can vary widely depending on the context. This creates a unique challenge: instead of logs and traces, we need to look at the received data itself and at how the model behaves when processing it.

Unified Monitoring Toolkit

To address this gap, we have developed a flexible Python-based monitoring toolkit that can be plugged into any ML workflow. It supports multiple data modalities, such as text, tabular, and image inputs, and includes built-in logic for different types of detection:

- Confidence-based detection: flags queries that cause low certainty or high entropy in the model predictions.

- Repetition-based detection: catches repeated or overly similar queries that may indicate service misuse.

- Similarity-based detection: identifies similar inputs in an embedding space with plug-and-play similarity and embedding logic.
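
To make the confidence-based idea concrete, here is a minimal standalone sketch of flagging a prediction by its top probability and its normalized entropy. It does not use the toolkit's own classes; the function names and both thresholds are assumptions chosen for illustration only.

```python
import math
from typing import Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def is_low_confidence(
    probs: Sequence[float],
    min_top_prob: float = 0.6,      # hypothetical threshold
    max_norm_entropy: float = 0.8,  # hypothetical threshold
) -> bool:
    """Flag a prediction whose top probability is low or whose entropy is high."""
    if len(probs) < 2:
        raise ValueError("need at least two classes")
    # Divide by log(K) so the entropy lies in [0, 1] regardless of class count.
    norm_entropy = shannon_entropy(probs) / math.log(len(probs))
    return max(probs) < min_top_prob or norm_entropy > max_norm_entropy


confident = [0.97, 0.02, 0.01]
uncertain = [0.40, 0.35, 0.25]
print(is_low_confidence(confident))  # False
print(is_low_confidence(uncertain))  # True
```

Normalizing the entropy keeps a single threshold meaningful across models with different numbers of output classes.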

Each detection logic is implemented so that it is easy to extend or adapt to specific use cases, whether you’re a researcher working on novel techniques or a developer maintaining an ML-powered product.
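
As a rough illustration of what plug-and-play embedding and similarity logic can look like, the sketch below passes both in as plain functions and compares each new input against a bounded history of recent ones. `SimilaritySketch`, `letter_counts`, and all parameter names are hypothetical and are not taken from the toolkit; the letter-count embedding is a toy stand-in for a learned encoder.

```python
import math
from collections import deque
from typing import Any, Callable, Deque, List, Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SimilaritySketch:
    """Hypothetical stand-in, not the toolkit's own detector class."""

    def __init__(
        self,
        embed: Callable[[Any], Vector],
        similarity: Callable[[Vector, Vector], float] = cosine_similarity,
        threshold: float = 0.95,
        history_size: int = 1000,
    ) -> None:
        self.embed = embed
        self.similarity = similarity
        self.threshold = threshold
        # Bounded history so memory use stays constant under heavy traffic.
        self.history: Deque[Vector] = deque(maxlen=history_size)

    def check(self, raw_input: Any) -> bool:
        """Return True if the input is too similar to a recent one, then store it."""
        vec = self.embed(raw_input)
        suspicious = any(
            self.similarity(vec, old) > self.threshold for old in self.history
        )
        self.history.append(vec)
        return suspicious


def letter_counts(text: str) -> List[float]:
    """Toy embedding: counts of the letters a-z in the text."""
    lowered = text.lower()
    return [float(lowered.count(chr(ord("a") + i))) for i in range(26)]


detector = SimilaritySketch(embed=letter_counts, threshold=0.95)
first = detector.check("what is the weather in Vienna")    # empty history
repeat = detector.check("what is the weather in Vienna?")  # near-duplicate
other = detector.check("zzz qqq xxx")                      # shares no letters
print(first, repeat, other)
```

Swapping in a different encoder or distance measure only means passing another function, which is the kind of extension point the paragraph above describes.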

Quick Start

The example below creates a default similarity-based detector for image inputs that marks them as suspicious if their similarity score is higher than 0.95:

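```python
from detectors import get_detector
from utils.query import Query

# Create a similarity-based detector for image inputs with a 0.95 threshold.
detector = get_detector("image_similarity", config={"threshold": 0.95})

# Wrap the input; your_image is a placeholder for your own image data.
query = Query(input_data=your_image)
result = detector.process(query)

print(result.is_suspicious, result.confidence, result.reason)
```

For Researchers and Practitioners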

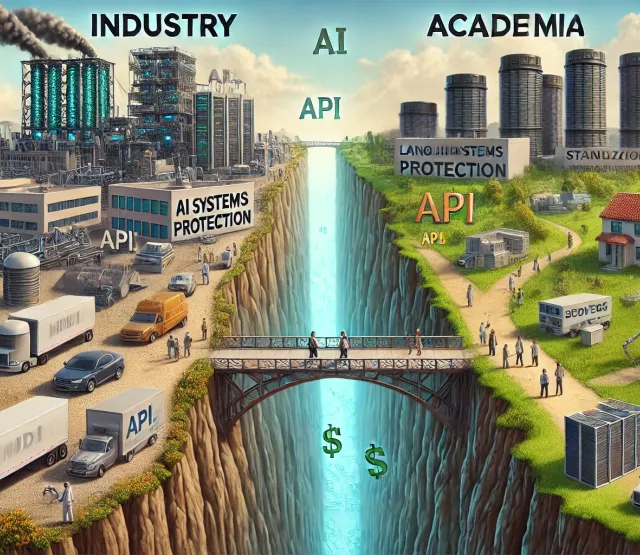

One of the advantages of our toolkit is that it is designed to work in both academic and industrial settings. For researchers, it serves as a clean and modular environment to prototype new monitoring strategies or compare existing ones. For practitioners, it offers practical tools to ensure that deployed models behave reliably in production.

Real-Time Monitoring with Prometheus and Grafana

To support real-time monitoring, we have also built an integration layer around the toolkit using Prometheus. This allows users to expose metrics like suspicious detection counts, confidence distributions, or error rates, all of which can be visualized in tools like Grafana. This makes it easy to embed ML-specific monitoring into existing DevOps setups, providing insights not just into system performance, but also into the behavior of the models themselves.
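
To give a feel for what such exposed metrics look like, the following dependency-free sketch renders a counter and a histogram in the Prometheus text exposition format. The class and the metric names (`monitor_suspicious_total`, `monitor_confidence`) are assumptions made for this example and are not the names used by the project's monitoring server; in practice the official `prometheus_client` package provides counter and histogram types and an HTTP exporter, so hand-rendering like this would rarely be needed.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class DetectionMetrics:
    """Hypothetical in-memory store rendered in Prometheus text format."""

    BUCKETS: Tuple[float, ...] = (0.25, 0.5, 0.75, 1.0)

    def __init__(self) -> None:
        self.suspicious: Dict[str, int] = defaultdict(int)
        self.confidences: List[float] = []

    def record(self, detector: str, is_suspicious: bool, confidence: float) -> None:
        """Store the outcome of one processed query."""
        if is_suspicious:
            self.suspicious[detector] += 1
        self.confidences.append(confidence)

    def render(self) -> str:
        """Return all metrics as a scrape-ready text payload."""
        lines = [
            "# HELP monitor_suspicious_total Queries flagged as suspicious.",
            "# TYPE monitor_suspicious_total counter",
        ]
        for name, count in sorted(self.suspicious.items()):
            lines.append(f'monitor_suspicious_total{{detector="{name}"}} {count}')
        lines += [
            "# HELP monitor_confidence Detector confidence per query.",
            "# TYPE monitor_confidence histogram",
        ]
        # Histogram buckets are cumulative: each counts observations <= its bound.
        for bound in self.BUCKETS:
            n = sum(1 for c in self.confidences if c <= bound)
            lines.append(f'monitor_confidence_bucket{{le="{bound}"}} {n}')
        total = len(self.confidences)
        lines.append(f'monitor_confidence_bucket{{le="+Inf"}} {total}')
        lines.append(f"monitor_confidence_sum {sum(self.confidences)}")
        lines.append(f"monitor_confidence_count {total}")
        return "\n".join(lines) + "\n"


metrics = DetectionMetrics()
metrics.record("image_similarity", True, 0.97)
metrics.record("image_similarity", False, 0.30)
output = metrics.render()
print(output)
```

A Grafana panel could then chart the rate of the counter per detector, or the bucket counts as a confidence distribution over time.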

Open-Source and Ready to Use

The monitoring toolkit and its integration in the monitoring server are open-source and available on GitHub. You can find everything, including examples, usage notebooks, and deployment instructions, in the following project repositories:

Looking Ahead

We’re deeply grateful for the support and funding that made this project possible. As we wrap up this chapter, we look forward to seeing how the toolkit evolves and where it will be used — in new research, production systems, or even in directions we haven't anticipated yet!