Funding year 2023 / Scholarship Call #18 / Project ID: 6794 / Project: Combining SHACL and Ontologies

The Shapes Constraint Language (SHACL) and the Web Ontology Language (OWL) are two popular standards introduced by the World Wide Web Consortium (W3C). But what are they, what are they good for, and how do they differ?

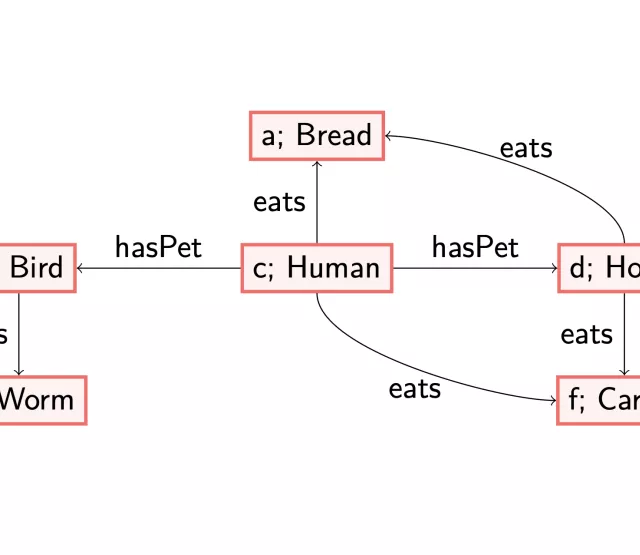

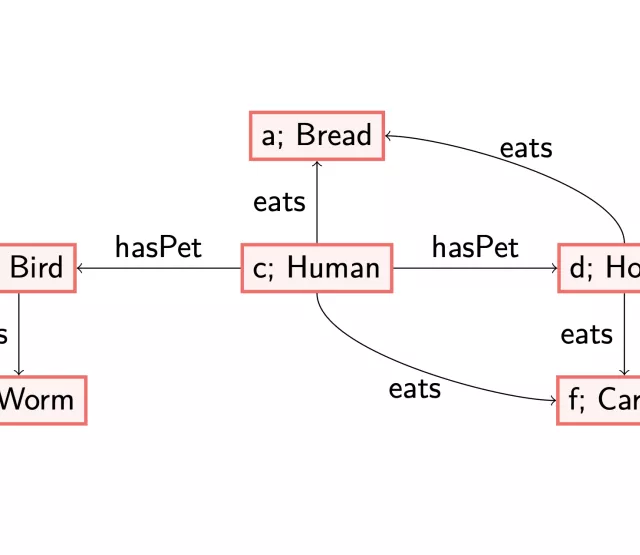

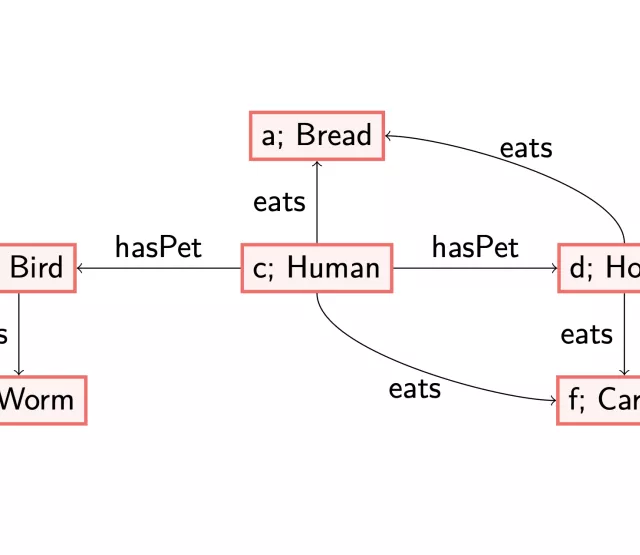

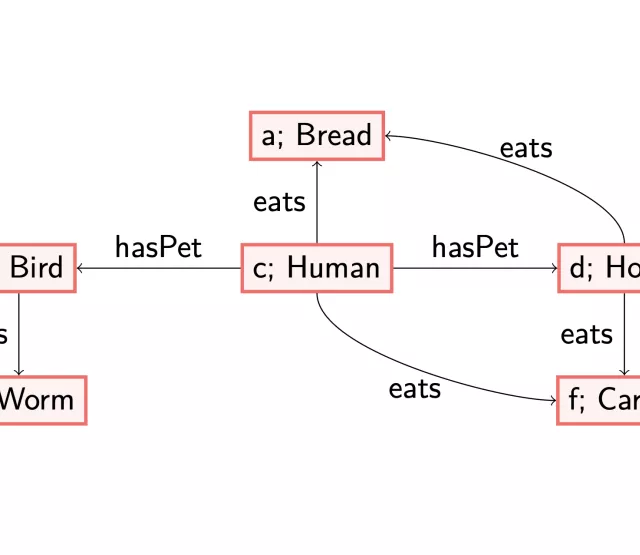

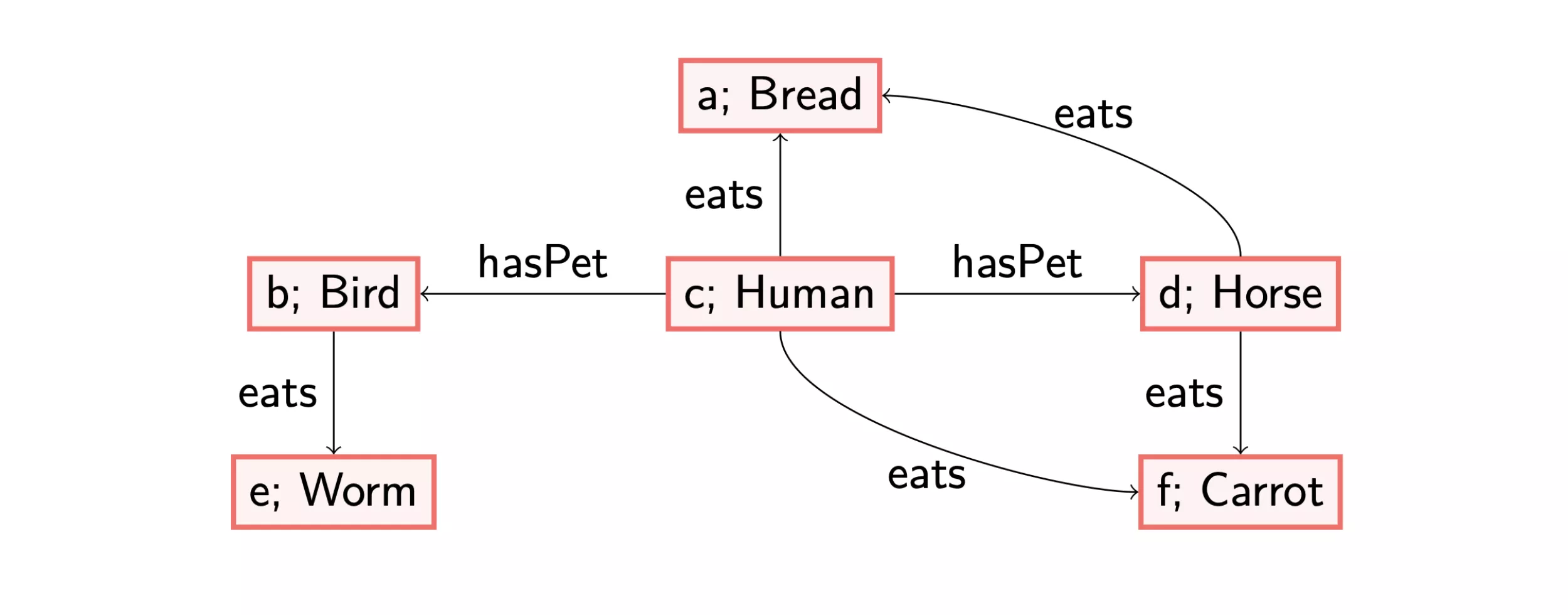

Let us start by introducing the Resource Description Framework (RDF): a graph-based model for handling data on the Web, also introduced by the W3C. It is currently the standard way of publishing and sharing data on the Web. RDF is used, for instance, to share healthcare information, among others by Fast Healthcare Interoperability Resources (FHIR), which helps in deciding on the best possible treatment for patients. The picture accompanying this blog is a small example: we have individuals, written as lowercase letters like ‘b’ or ‘c’, which are identifiers (or names) of concrete objects (or humans, animals, …). Furthermore, we have relations between individuals, like ‘c has a pet which is named b’, and basic concepts, like ‘is a Human’. Note that such a basic concept can also be viewed as the relation ‘is a’ pointing to an identifier that denotes ‘Human’.
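To make the graph view concrete, here is a minimal Python sketch that stores the small example as a set of (subject, predicate, object) triples. The predicate names `hasPet` and `type`, the helper `objects`, and the choice to attach ‘Human’ to ‘c’ are assumptions made for illustration; real RDF data would use IRIs and would usually be handled with a dedicated library.

```python
# A tiny RDF-style graph as a set of (subject, predicate, object) triples.
# All names here are illustrative placeholders, not real IRIs.
graph = {
    ("c", "hasPet", "b"),      # relation between two individuals
    ("c", "type", "Human"),    # basic concept, viewed as an 'is a' relation
}


def objects(graph, subject, predicate):
    """Return every o such that (subject, predicate, o) is in the graph."""
    return {o for (s, p, o) in graph if s == subject and p == predicate}


pets_of_c = objects(graph, "c", "hasPet")
concepts_of_c = objects(graph, "c", "type")
print(pets_of_c, concepts_of_c)
```

Treating ‘is a’ as just another predicate is what lets a single lookup function serve both relations and basic concepts.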

OWL

In parallel with RDF, the W3C introduced OWL to reason about implicit knowledge, as RDF data is in general incomplete. With OWL, it is possible to express ontological axioms. These axioms can be used to reason about information you do not want to, or cannot, explicitly add to the graph. The profiles of OWL are based on Description Logics (DLs) and make the Open-World Assumption (OWA). Intuitively, this means that the given data is only a partial description of the facts; facts that are missing from the data may still be true.

To illustrate this, consider a graph representing a database of horses that records how the horses relate to each other. Implicitly, we know that every horse has parents and that if an entry is some horse's parent, it is a horse too. However, at some point the database will stop listing parents, perhaps because a parent is not known or because it is not relevant. Clearly, a horse whose parents are not listed in the database still had parents. Adding an ontological axiom such as

$$\mathit{Horse} \sqsubseteq \exists \mathit{hasParent}.\mathit{Horse}$$

which states that every horse has at least one parent that is itself a horse, captures this implicit knowledge.
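The effect of such an axiom can be sketched in a few lines of Python. The sketch below is not an OWL reasoner: it hard-codes a single rule, "every horse has some parent", and compares what is listed in the data with what follows from the data plus that rule. The horse name `comet` and the function names are hypothetical.

```python
# Hypothetical horse database: comet is a horse, but no parent is recorded.
graph = {
    ("comet", "type", "Horse"),
}


def listed_parents(graph, x):
    """Parents of x that are explicitly recorded in the graph."""
    return {o for (s, p, o) in graph if s == x and p == "hasParent"}


def has_listed_parent(graph, x):
    """True only if some parent of x is written down in the data."""
    return bool(listed_parents(graph, x))


def entails_parent(graph, x):
    """True if a parent is listed, or if x is a horse, in which case the
    hard-coded rule guarantees an (unnamed) parent exists."""
    is_horse = (x, "type", "Horse") in graph
    return has_listed_parent(graph, x) or is_horse


print(has_listed_parent(graph, "comet"))  # nothing recorded in the data
print(entails_parent(graph, "comet"))     # follows from the rule
```

The second function answers "yes" without ever naming the parent, which is exactly the kind of implicit knowledge an ontology is meant to supply.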

SHACL

After its introduction, RDF was soon adopted in many applications, including, as mentioned, healthcare. Making decisions on the basis of correct data thus became particularly critical, and in 2017 the W3C published SHACL as a standard for validating RDF data. SHACL is a machine-readable language (the validation task can be given to a computer) consisting of so-called “shapes” that a certain set of nodes of the graph, the targets, must conform to. An important difference between OWL and SHACL is that SHACL makes the Closed-World Assumption (CWA): we assume that all facts that are true are present in the graph.

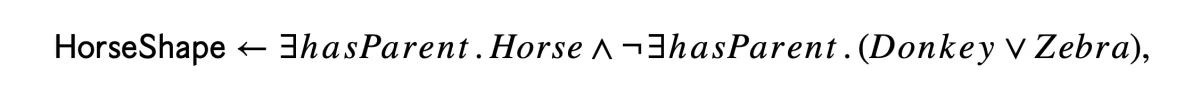

To see why we need the CWA when validating data, let us go back to the example of the horses: one way to check whether an animal is a horse is to validate that it has at least one parent that is a horse and no parent that is a donkey or a zebra. This corresponds to the following SHACL constraint, in DL syntax,

$$\mathit{HorseShape} \leftarrow \exists \mathit{hasParent}.\mathit{Horse} \sqcap \neg \exists \mathit{hasParent}.(\mathit{Donkey} \sqcup \mathit{Zebra})$$

which says that an individual has the shape of a horse when there exists a hasParent-arrow to an individual that has the basic concept ‘Horse’, and no such arrow to an individual that has the basic concept ‘Donkey’ or ‘Zebra’. We can only verify that the animal in question has no parent that is a donkey or a zebra if we assume that all of its parents are given in the graph. Only then can we be sure that no such parent exists.
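The check described above can be sketched directly in Python. This is a hand-written illustration of the closed-world reading, not a SHACL engine; the animal names and the function `conforms_to_horse_shape` are hypothetical, and a missing triple is treated as a false fact.

```python
# Hypothetical data: star has one horse parent; mule has a horse parent
# and a donkey parent; comet has no parents listed at all.
graph = {
    ("star", "hasParent", "comet"),
    ("comet", "type", "Horse"),
    ("mule", "hasParent", "comet"),
    ("mule", "hasParent", "jack"),
    ("jack", "type", "Donkey"),
}


def parents(graph, x):
    """All parents of x recorded in the graph."""
    return {o for (s, p, o) in graph if s == x and p == "hasParent"}


def has_type(graph, x, concept):
    """True if the graph states that x belongs to the given basic concept."""
    return (x, "type", concept) in graph


def conforms_to_horse_shape(graph, x):
    """Closed-world check: some listed parent is a Horse, and no listed
    parent is a Donkey or a Zebra. Unlisted parents are assumed not to exist."""
    ps = parents(graph, x)
    some_horse = any(has_type(graph, p, "Horse") for p in ps)
    some_forbidden = any(
        has_type(graph, p, "Donkey") or has_type(graph, p, "Zebra") for p in ps
    )
    return some_horse and not some_forbidden


for animal in ("star", "mule", "comet"):
    print(animal, conforms_to_horse_shape(graph, animal))
```

Note that `comet` fails the check only because no parent is listed for it: under the closed-world reading, the absence of a parent in the data counts as the absence of a parent.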

Thus, both SHACL and OWL are useful tools when working with data in graph format. However, they make different assumptions about the completeness of the data and serve different purposes: OWL infers knowledge, while SHACL checks constraints. The natural next question is: what happens when we combine both features?

Anouk Michelle Oudshoorn