Funding year 2025 / Scholarship Call #20 / Project ID: 7728 / Project: Learning in the Quantum Regime

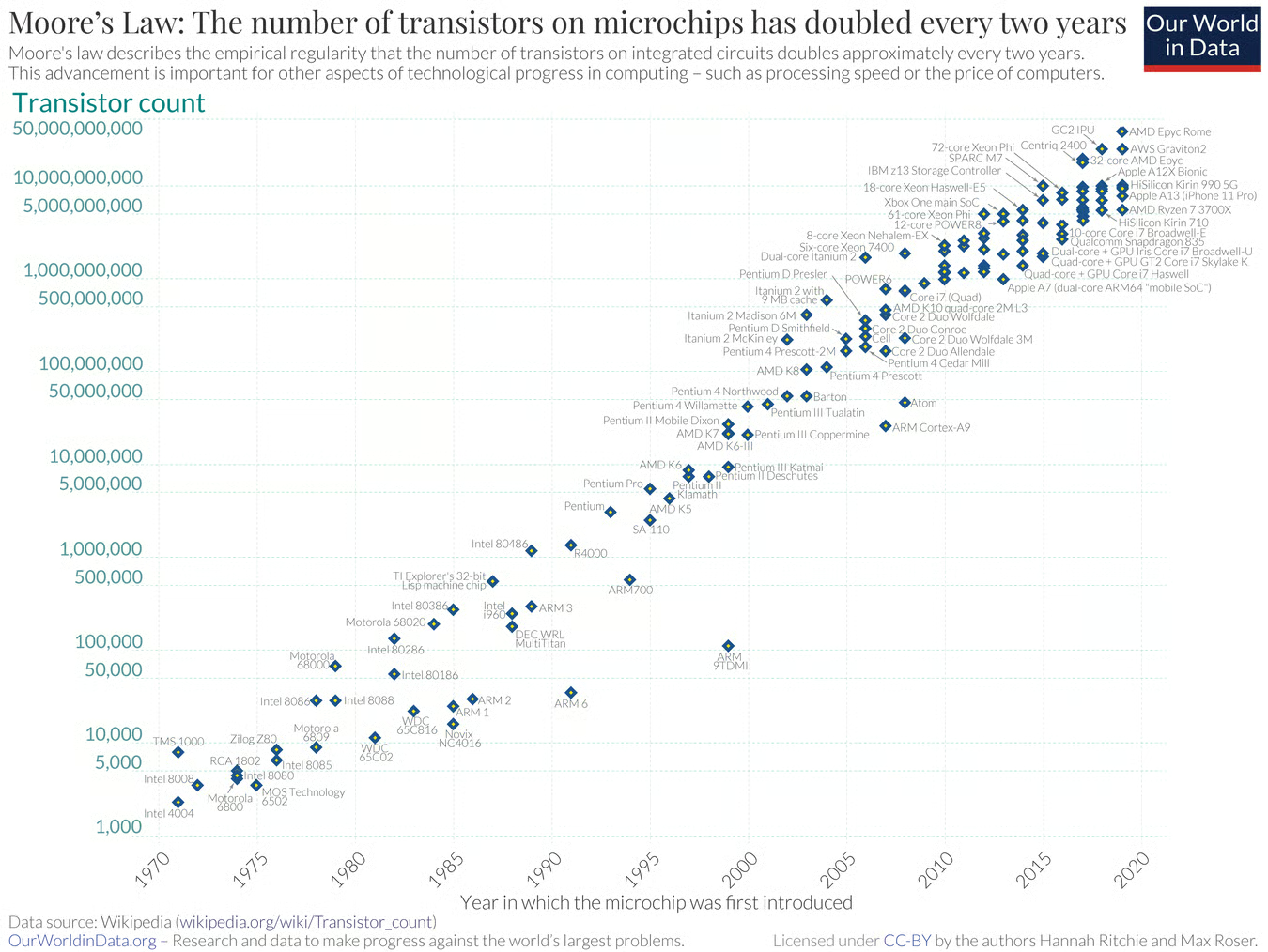

Computational workloads keep growing, and the end of Moore's law has triggered extensive research into alternative computing paradigms. The resulting heterogeneity of devices, however, poses significant challenges for modern data centers.

What is Moore's law, and what is its significance?

As early as 1965, Gordon Moore observed that with each new generation of computer chips, the number of transistors that could be packed onto the same area of silicon roughly doubled. In practice, this meant that computing capabilities increased drastically every few years.

This rough approximation turned out to be surprisingly accurate; in fact, it held for the better part of 50 years. As a result of this exponential improvement, software kept getting faster and devices kept getting cheaper.
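To get a feel for what "exponential" means here, the following minimal Python sketch computes the growth factor implied by a fixed doubling period. The function name `transistor_growth`, the two-year doubling period and the 50-year horizon are illustrative assumptions, not exact historical figures.

```python
def transistor_growth(years: float, doubling_period: float = 2.0) -> float:
    """Growth factor after `years`, assuming one doubling every `doubling_period` years.

    The default two-year period is an assumption chosen for illustration.
    """
    return 2 ** (years / doubling_period)


# Hypothetical illustration: 50 years at an assumed two-year doubling period
factor = transistor_growth(50)
print(f"{factor:,.0f}x")  # prints "33,554,432x", i.e. 2^25
```

Even with this modest doubling rate, 50 years add up to a growth factor of tens of millions, which is why the slowdown of this trend matters so much.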

However, in recent years this trend has begun to slow down. As a result, simply waiting for faster hardware is no longer enough.

Today's workloads and hardware

Hardware development over the past decades has made computers an incredibly fast and useful general-purpose technology. However, we have seen a shift in computational workloads, fueled in particular by Artificial Intelligence (AI) technologies. Modern AI performs billions of similar calculations in parallel, which is exactly what Graphics Processing Units (GPUs) excel at. Although GPUs were originally designed for computer graphics, the recent boom in the industry has been driven largely by the demands of AI applications.

Large-scale neural networks, such as the Large Language Models behind ChatGPT or Gemini, require extensive computational resources, far beyond the capabilities of a standard GPU-equipped laptop. This has created the need to combine many devices and pool their capabilities in what we call data centers or clusters.

Beyond hardware costs, maintaining such clusters is inherently expensive due to concerns such as availability, communication, and security. This has led to a push toward outsourcing computation, i.e., running algorithms in large-scale data centers owned by others, most notably Microsoft or Google. These data centers offer extensive computational resources, on-demand access, and availability guarantees. As a result, a large part of today's internet relies on them.

Problems of data centers

Despite their benefits, data centers have an immense environmental footprint that significantly affects their surroundings, e.g., through strain on local water supplies. This is largely due to the enormous heat generated by the devices and the water-cooling systems needed to dissipate it.

Furthermore, GPUs are inherently power-hungry, which has led tech companies to invest heavily in power generation and energy infrastructure. The massive CO2 emissions that come with this are a substantial problem. For example, training Meta's Llama 2 models reportedly emitted up to 291 tons of CO2 equivalents. For comparison, the EU per-capita footprint is about 10 tons of CO2 equivalents per year.
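To make the comparison concrete, the short Python calculation below converts the training emissions into years of an average EU citizen's footprint. It only uses the two reported figures quoted above (291 t and roughly 10 t per person and year); the variable names are hypothetical.

```python
# Reported figures quoted in the text (not measured here)
training_emissions_t = 291.0      # t CO2e, upper figure reported for Llama 2 training
eu_per_capita_t_per_year = 10.0   # t CO2e per person per year (approximate)

# How many years of one EU citizen's footprint the training run corresponds to
person_years = training_emissions_t / eu_per_capita_t_per_year
print(f"~{person_years:.1f} person-years")  # prints "~29.1 person-years"
```

In other words, a single training run of this kind corresponds to roughly three decades of one person's emissions.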

What now?

Given the usefulness of the technology, we cannot reasonably expect AI workloads to decrease any time soon. This is why the research community is exploring alternative computing paradigms, such as Quantum Computing and Neuromorphic Computing (for an introduction to quantum computing, stay tuned for future blog posts).

These computing paradigms are suited to specialized computations. In other words, we expect to keep classical hardware for general-purpose tasks, but to execute parts of algorithms on specialized devices, which may be faster, more energy-efficient, or able to solve problems that are otherwise practically infeasible.

This, however, requires integrating these devices effectively into data centers so that execution is as efficient as possible.

Heterogeneous Data Centers

The large-scale integration of GPUs alone has already made data centers substantially more heterogeneous, which comes with its own challenges. One example is communication: if an application relies on many round trips between classical (CPU) nodes and GPU nodes, fast communication between the two is required to ensure that the speed-ups from the specialized hardware are not cancelled out by the additional latency.
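The trade-off between accelerator speed-up and communication latency can be illustrated with a deliberately simple cost model. The Python sketch below assumes a fixed latency per round trip and no overlap between communication and computation; the function names and all numbers are hypothetical and only meant to show the effect.

```python
def offload_time(cpu_time_s: float, speedup: float, round_trips: int, latency_s: float) -> float:
    """Runtime of the offloaded version: accelerated compute plus per-round-trip latency.

    Simplifying assumption: communication is not overlapped with computation.
    """
    return cpu_time_s / speedup + round_trips * latency_s


def offloading_pays_off(cpu_time_s: float, speedup: float, round_trips: int, latency_s: float) -> bool:
    """True if offloading beats running everything on the classical (CPU) node."""
    return offload_time(cpu_time_s, speedup, round_trips, latency_s) < cpu_time_s


def break_even_latency(cpu_time_s: float, speedup: float, round_trips: int) -> float:
    """Per-round-trip latency at which offloading neither gains nor loses time."""
    return cpu_time_s * (1 - 1 / speedup) / round_trips


# Hypothetical workload: 1 s of CPU work, a 10x accelerator speed-up, 1000 round trips
for latency in (1e-6, 1e-3):  # 1 microsecond vs. 1 millisecond per round trip
    t = offload_time(1.0, 10.0, 1000, latency)
    print(f"latency={latency:g} s -> offloaded runtime {t:.3f} s, "
          f"pays off: {offloading_pays_off(1.0, 10.0, 1000, latency)}")
print(f"break-even latency: {break_even_latency(1.0, 10.0, 1000) * 1e3:.2f} ms")
```

With one microsecond per round trip, the offloaded run takes about 0.101 s; with one millisecond it takes about 1.1 s, which is slower than the 1 s CPU-only baseline even though the accelerator itself is ten times faster. In this toy model, the break-even point lies at 0.9 ms per round trip.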

Quantum computing comes with its own challenges, most notably its inherent sensitivity to noise, which leads to erroneous executions. Furthermore, different quantum hardware technologies require different setups, e.g., cryogenic cooling for superconducting qubits. Thus, there is a trade-off between keeping the system as isolated as possible and ensuring fast communication with classical hardware.

Device heterogeneity is, however, not limited to data centers. In particular, the internet stack has evolved to include edge and fog nodes, i.e., small, potentially specialized devices that operate geographically closer to the user. This makes the internet stack even more complex.

These developments thus pose novel challenges for the research community and open up exciting new research questions!

Blog Picture by Bethany Drouin from Pixabay