Funding year 2024 / Project Call #19 / Project ID: 7207 / Project: HaSPI

In the late 2010s, Myanmar, a country of about 50 million people, had 18 million Facebook users. After decades of military rule, sudden internet access turned the platform into an influential news source, but also into fertile ground for hate speech. Limited digital literacy met deep ethnic and religious tensions, and hate, especially against the Rohingya minority, spread fast. Facebook was unprepared: it had few Burmese-speaking moderators, its AI could not read local fonts, and its reporting system was available only in English until 2015. Unchecked hate posts fuelled hostility. In 2017, violence escalated into a nationwide genocide (cf. Stecklow, 2018, n.p.), a tragic reminder that content moderation must work in every language. For a summary of events, see the 2018 episode “Facebook” of Last Week Tonight with John Oliver or the BBC report ‘The country where Facebook posts whipped up hate’.

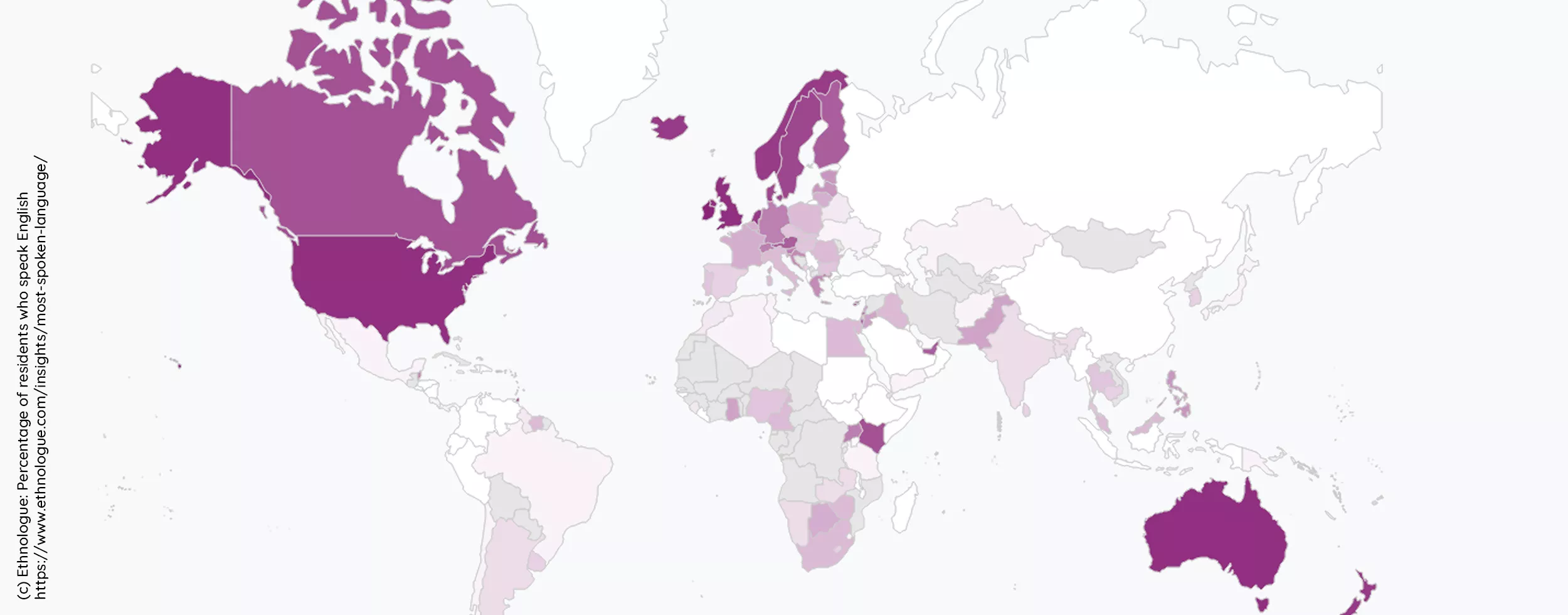

The English Advantage

English is spoken by 1.5 billion people, about 18 % of the global population (calculated with 8.2 billion people, cf. United Nations, 2024, VII.), making it the most spoken language in the world (cf. Ethnologue, 2025, n. p.). Due to its high level of digital support, it offers an abundance of resources for language models (cf. Ethnologue, n. d., n. p.). This imbalance creates various challenges when developing content moderation systems in minority language contexts.

Every Language is Unique

Every language has its own origin, culture and composition. For example, Moroccan Darija (MD) is heavily shaped by French, Berber and Spanish, meaning that MD speakers often switch between languages. This is called code-switching (CS) and can even mean switching writing direction between single sentences (cf. Aghzal, Mourhir, 2021, 267.). Hence, the structure and technical design of language models must account for the characteristics of the respective language.
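To make the writing-direction aspect more tangible, here is a minimal Python sketch that flags text mixing left-to-right and right-to-left scripts. It relies only on the standard library's `unicodedata.bidirectional`; the helper names (`token_direction`, `direction_runs`, `mixes_directions`) and the sample string are illustrative assumptions and are not taken from the cited work. It only catches switches that also change the script, so code-switching within one script would go unnoticed.

```python
import unicodedata
from itertools import groupby


def token_direction(token):
    """Return 'LTR' or 'RTL' based on the first strongly directional character."""
    for ch in token:
        bidi = unicodedata.bidirectional(ch)
        if bidi == "L":            # strong left-to-right (e.g. Latin letters)
            return "LTR"
        if bidi in ("R", "AL"):    # strong right-to-left (Hebrew, Arabic letters)
            return "RTL"
    return None                    # digits, punctuation: no strong direction


def direction_runs(text):
    """Group consecutive whitespace-separated tokens that share a direction."""
    tagged = [(token_direction(tok), tok) for tok in text.split()]
    tagged = [(d, tok) for d, tok in tagged if d is not None]
    return [
        (direction, " ".join(tok for _, tok in group))
        for direction, group in groupby(tagged, key=lambda pair: pair[0])
    ]


def mixes_directions(text):
    """True if the text contains both LTR and RTL runs."""
    return len({direction for direction, _ in direction_runs(text)}) > 1


if __name__ == "__main__":
    sample = "شكرا merci beaucoup"  # illustrative mixed Arabic/Latin string
    for direction, run in direction_runs(sample):
        print(direction, run)
    print(mixes_directions(sample))
```

A check like this could serve as a cheap preprocessing signal, for example to route mixed-script comments to a tokenizer or model that has seen both scripts.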

Digital Inclusion is Key

Most of the world's languages are not as integrated into the digital world as, for example, English or German. Yet aspects like script encoding standards and the availability of typefaces or keyboards are essential for generating the necessary data. Another vital sociopolitical factor is access to digital devices such as computers (cf. Pava, et al., 2025, 7f.).

Where to Draw the Line?

Deciding when speech becomes harmful is difficult. It requires understanding context, identifying tone of voice or implicit meaning, and detecting irony and sarcasm. This is already extraordinarily hard for humans, especially because the judgement depends heavily on their personal views and their social and cultural background (cf. Abdellaoui, et al., 2024, 2.). The 2025 episode “Facebook & Content Moderation” of Last Week Tonight with John Oliver illustrates this as well.

The Data Gap: Low Resource, High Challenge

For low-resource languages, representative data is scarce. Labelled and unlabelled datasets for languages like Burmese or MD are mostly of poor quality or entirely absent. Moreover, available sources can be limited in scope, as they mainly consist of religious, legal or online texts (cf. Pava, et al., 2025, 7f.). Another issue is the lack of language-specific tools, such as part-of-speech (POS) taggers or word embeddings. Consequently, researchers tend to build their own datasets, which makes it difficult to compare models (see for example Aghzal, Mourhir, 2021, 267.). Machine translation can sometimes supplement data but remains limited, as it fails to capture linguistic nuances or produces unnatural patterns (cf. Pava, et al., 2025, 15f.).

The Model Gap: Effective Transfer Learning

Most language models are primarily trained on English data. To bridge this gap, researchers explore different approaches ranging from monolingual applications to multilingual models like mBERT (cf. Pava, et al., 2025, 10.). These models have been pre-trained on high-resource datasets and can be fine-tuned on low-resource languages. Recent studies show promising results for this type of cross-lingual knowledge transfer, especially when only little training data is available (cf. Sai, et al., 2020, 2. & Abdellaoui, et al., 2024, 4.).

Above Accuracy: Evaluation & Testing

Language models are vulnerable to adversarial attacks, meaning their reliability suffers if the input data is perturbed (cf. Goyal, et al., 2023, 1.). Therefore, standard testing metrics like accuracy are insufficient. Evaluation should consider indicators like correctness, robustness and fairness. Metamorphic testing addresses this by analysing how outputs change when inputs are altered. Adversarial data, such as inserted spaces or word substitutions, is often used for these tests. However, even well-performing models for low-resource languages must ultimately be tested in real-world applications to prove their practical suitability (cf. Abdellaoui, et al., 2024, 5f.).
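The idea behind metamorphic testing can be sketched in a few lines of Python. The example below is a simplified illustration under stated assumptions, not the setup used in the cited studies: `toy_classifier` is a deliberately naive keyword matcher standing in for a real model, and the word lists `BLOCKLIST` and `SYNONYMS` as well as the two perturbation functions are hypothetical. The metamorphic relation being checked is that a perturbation which preserves meaning should not change the predicted label.

```python
BLOCKLIST = {"idiot"}            # hypothetical list of flagged words
SYNONYMS = {"idiot": "moron"}    # hypothetical substitution table


def toy_classifier(text):
    """Naive stand-in for a model: flags text containing a blocklisted word."""
    return any(tok.strip(".,!?").lower() in BLOCKLIST for tok in text.split())


def split_longest_word(text):
    """Perturbation: insert a space in the middle of the longest word."""
    words = text.split()
    if not words:
        return text
    i = max(range(len(words)), key=lambda k: len(words[k]))
    if len(words[i]) < 2:
        return text
    mid = len(words[i]) // 2
    words[i] = words[i][:mid] + " " + words[i][mid:]
    return " ".join(words)


def substitute_words(text):
    """Perturbation: replace words with a synonym from the table."""
    return " ".join(SYNONYMS.get(w.lower(), w) for w in text.split())


PERTURBATIONS = {
    "inserted_space": split_longest_word,
    "word_substitution": substitute_words,
}


def metamorphic_violations(classify, texts, perturbations):
    """Collect cases where a perturbation flips the predicted label."""
    violations = []
    for text in texts:
        expected = classify(text)
        for name, perturb in perturbations.items():
            variant = perturb(text)
            if classify(variant) != expected:
                violations.append((name, text, variant))
    return violations


if __name__ == "__main__":
    samples = ["you are an idiot", "have a nice day"]
    for name, original, variant in metamorphic_violations(
        toy_classifier, samples, PERTURBATIONS
    ):
        print(f"{name}: {original!r} -> {variant!r} changed the label")
```

Note that no ground-truth labels are needed: the test only compares the model's output on the original text with its output on the perturbed variant, which is what makes this style of testing attractive when labelled data is scarce.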

Learning to Talk Global

Content moderation systems can effectively detect hate speech only if they truly understand the respective language, its nuances, and contexts. Local language competence is not a minor detail, but a crucial key to making on- and offline spaces safe - no matter where.

While German hate speech detection is well-researched on social media, it remains underexplored in newspaper forums (cf. Krejca, et al., 2025, 1.). With HaSPI, we aim to close this gap by applying an innovative imitation learning approach that enables reliable detection in this context as well, and to gain deeper insight into the reasons for hate speech in (Austrian) German.