Funding year 2024 / Project Call #19 / Project ID: 7442 / Project: LEO Trek

The Evolving Landscape of Serverless Computing

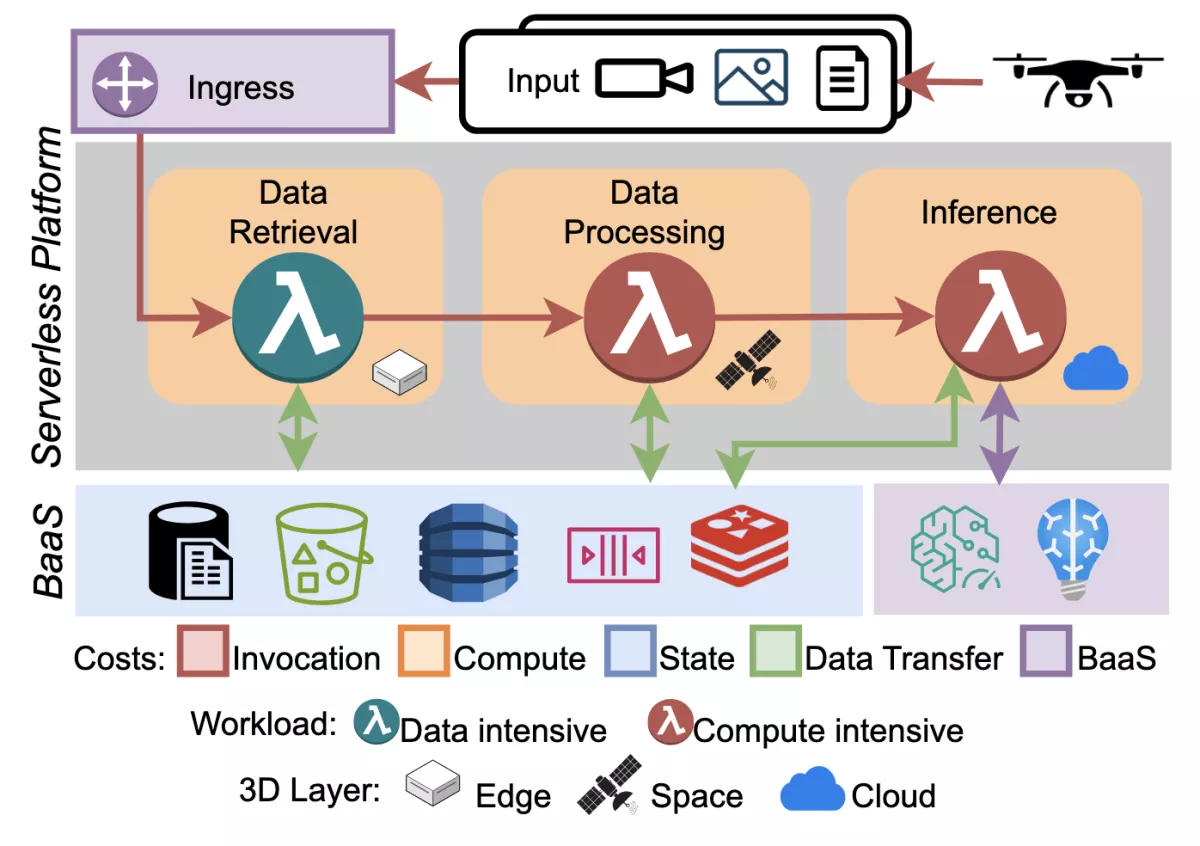

Serverless computing has become a popular paradigm for deploying elastic, event-driven applications with minimal operational overhead. Its fine-grained billing model and auto-scaling capabilities have made it the default choice for real-time data processing, IoT, and AI inference workloads. However, serverless functions are no longer confined to centralized cloud regions. Emerging infrastructures such as edge nodes and in-orbit processing aboard low Earth orbit (LEO) satellites are shaping what is known as the 3D Compute Continuum. In this environment, the infrastructure layers (edge, cloud, space) offer vastly different capabilities and cost structures. This leaves developers and platform designers with a major blind spot: how do serverless costs behave across these heterogeneous environments? This is where Cosmos comes in.

Why the 3D Continuum Matters

To illustrate the problem, we modeled a serverless workflow that monitors deforestation in remote areas and spans all three compute layers:

- Edge: Drones collect temperature, CO₂, and imagery data.

- Space: LEO satellites preprocess data in orbit, avoiding the need to downlink 1.5 TB/day over 300 Mbps radio links to ground stations.

- Cloud: AI inference models run on Earth using reduced datasets.

This architecture minimizes latency, reduces transmission overhead, and improves scalability, but it also introduces diverse cost drivers and complex trade-offs.
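To put the downlink figure from the Space layer into perspective, here is a rough back-of-the-envelope calculation. It is only a sketch under stated assumptions that are not part of the original workflow description: decimal units (1 TB = 10^12 bytes), a link that sustains its full 300 Mbps rate, and no protocol overhead. The function name `downlink_hours` is made up for this illustration.

```python
# Back-of-the-envelope downlink time for the figures quoted above.
# Assumptions (not from the source): decimal units (1 TB = 10**12 bytes),
# the link sustains its full nominal rate, and there is no protocol overhead.
DAILY_VOLUME_BYTES = 1.5e12    # 1.5 TB/day
LINK_RATE_BITS_PER_S = 300e6   # 300 Mbps


def downlink_hours(volume_bytes: float, rate_bits_per_s: float) -> float:
    """Hours of continuous transmission needed to move volume_bytes."""
    seconds = volume_bytes * 8 / rate_bits_per_s
    return seconds / 3600


hours = downlink_hours(DAILY_VOLUME_BYTES, LINK_RATE_BITS_PER_S)
print(f"{hours:.1f} h of continuous downlink per day")  # prints "11.1 h ..."
```

Under these idealized assumptions, shipping the raw data would occupy the link for about 11 hours every day. A LEO satellite is typically in view of a given ground station for only a few minutes per pass, so reducing the data in orbit before downlinking is the more realistic option.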

Cosmos: A Cost and Trade-off Model for 3D Serverless Workflows

Cosmos is a cost model and performance-cost trade-off framework tailored for serverless workflows deployed across the 3D Continuum. Its main contributions are:

- A cost model and a performance-cost trade-off model for serverless workflows.

- A cost taxonomy that classifies the main cost drivers.

- A case study covering different commercial cloud and edge providers, together with a simulation of LEO costs.

What Cosmos Offers

Cosmos identifies five main cost drivers:

- Invocation: cost per request.

- Compute: billed by execution time and memory.

- Data Transfer: intra/inter-region bandwidth costs.

- State Management: external services to persist state (e.g., DBs, KVS).

- Backend-as-a-Service (BaaS): AI, analytics, and other platform services.
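One way to picture the taxonomy is as a per-function cost record whose five fields add up to the workflow total. The sketch below is an illustration under assumptions, not the formulation used in the Cosmos paper: the class and function names (`DriverCosts`, `compute_cost`, `workflow_cost`, `driver_shares`), the GB-second price, and all cost figures are hypothetical.

```python
from dataclasses import dataclass, asdict


@dataclass
class DriverCosts:
    """Cost of one workflow function per driver, in USD (illustrative)."""
    invocation: float
    compute: float
    data_transfer: float
    state_management: float
    baas: float

    def total(self) -> float:
        return sum(asdict(self).values())


def compute_cost(invocations: int, duration_s: float,
                 memory_gb: float, price_per_gb_s: float) -> float:
    """Compute billed by execution time and memory (GB-seconds)."""
    return invocations * duration_s * memory_gb * price_per_gb_s


def workflow_cost(functions: dict) -> float:
    """Total workflow cost: sum over all functions and all drivers."""
    return sum(costs.total() for costs in functions.values())


def driver_shares(functions: dict) -> dict:
    """Fraction of the total workflow cost attributable to each driver."""
    total = workflow_cost(functions)
    shares: dict = {}
    for costs in functions.values():
        for driver, value in asdict(costs).items():
            shares[driver] = shares.get(driver, 0.0) + value / total
    return shares


# Hypothetical figures, chosen only to keep the arithmetic easy to follow.
workflow = {
    "preprocess": DriverCosts(
        invocation=0.5,
        compute=compute_cost(1000, 2.0, 0.5, 0.002),  # hypothetical price
        data_transfer=5.0, state_management=2.5, baas=0.0),
    "inference": DriverCosts(
        invocation=0.5,
        compute=compute_cost(1000, 1.5, 0.5, 0.002),  # hypothetical price
        data_transfer=0.5, state_management=0.5, baas=7.0),
}

print(f"total: {workflow_cost(workflow):.2f} USD")
for driver, share in driver_shares(workflow).items():
    print(f"{driver}: {share:.1%}")
```

Breaking the total down by driver makes it visible which driver dominates the bill for a given workflow.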

Using this taxonomy, Cosmos models the total serverless workflow cost and introduces a performance-cost optimization model. It enables platforms to dynamically select the best layer (edge, cloud, or space) based on workload, latency goals, and budget constraints. For example, compute-heavy AI functions might favor the cloud for efficiency, while preprocessing large Earth observation (EO) satellite datasets might best be done in orbit.
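The layer selection described above can be pictured as a small constrained choice: discard the layers that violate the latency goal or the budget, then take the cheapest one that remains. The following is a minimal sketch of that idea, not the Cosmos optimization model itself. The names `Placement` and `select_layer` and all latency and cost values are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Placement:
    """Estimated latency and cost of running a function on one layer."""
    layer: str
    latency_ms: float
    cost_usd: float


def select_layer(options: list, max_latency_ms: float,
                 budget_usd: float) -> Optional[Placement]:
    """Cheapest placement that meets both the latency goal and the budget.

    Returns None when no layer satisfies both constraints.
    """
    feasible = [o for o in options
                if o.latency_ms <= max_latency_ms and o.cost_usd <= budget_usd]
    if not feasible:
        return None
    # Prefer lower cost; break ties by lower latency.
    return min(feasible, key=lambda o: (o.cost_usd, o.latency_ms))


# Hypothetical estimates for a single function (not measured values).
options = [
    Placement("edge", latency_ms=40.0, cost_usd=3.0),
    Placement("cloud", latency_ms=120.0, cost_usd=1.0),
    Placement("space", latency_ms=15.0, cost_usd=9.0),
]

choice = select_layer(options, max_latency_ms=100.0, budget_usd=5.0)
print(choice.layer if choice else "no feasible layer")  # prints "edge"
```

With these made-up numbers, a 100 ms latency goal rules out the cloud and a 5 USD budget rules out space, leaving the edge. Relaxing the latency goal to 200 ms would make the cloud the cheapest feasible option.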

What We Found

We evaluated Cosmos using real workloads on AWS and GCP:

- For data-intensive tasks, data transfer and state management made up 75% of costs on AWS and 52% on GCP.

- For compute-intensive tasks like AI inference, BaaS accounted for 83% of costs on AWS and 97% on GCP.

- GCP was cheaper for sustained high-volume workloads, while AWS was more cost-effective at low volumes.

The Cosmos performance-cost analysis showed that LEO satellites offer very low latency, but at a high cost. Cloud and edge placements require careful trade-offs to meet service level objectives (SLOs) without overspending.

What’s Next

As space-based computing becomes viable, precise cost modeling is critical. Cosmos lays the groundwork for automated scheduling and cost-aware orchestration across the continuum. Future work includes predictive placement mechanisms that match serverless function requirements to the optimal infrastructure layer, minimizing latency and cost while respecting SLOs.

The Cosmos paper was published at IEEE SMARTCOMP 2025 (https://arxiv.org/pdf/2504.20189) and is part of the LEO Trek netidee project. The source code is available at https://github.com/polaris-slo-cloud/cosmos