Funding year 2023 / Scholarship Call #18 / Project ID: 6885 / Project: Increasing Trustworthiness of Edge AI by Adding Uncertainty Estimation to Object Detection

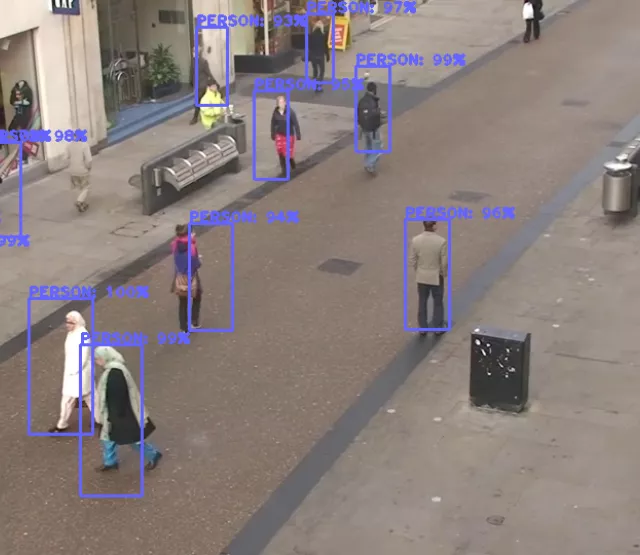

Starting from the YOLO11 detection framework, we implemented several uncertainty estimation methods and evaluated them on several autonomous driving datasets.

In this blog post, we extend the previous evaluation with further baselines, datasets, and an additional uncertainty metric. The core strategy is to build on the pre-trained YOLO11n model ("n" stands for "nano"), a lightweight detector suited to fast inference, and to add uncertainty estimation while preserving the detection performance of the base model as far as possible.

Datasets

All datasets contain pedestrians and vehicles as target objects and provide separate training and validation splits. By training on one dataset and evaluating on the others, we place the detector in domain-shifted settings, where it is confronted with scenes unlike those seen during training.

- Cityscapes [1]: We use this dataset to fine-tune YOLO11n. A separate split is kept for validation.

- Foggy Cityscapes [2]: The Cityscapes dataset with a fog overlay, which makes objects harder to detect. It serves as a validation dataset.

- RainCityscapes [3]: Similar to the foggy version, this dataset adds rain as another weather condition that challenges the models during validation.

- KITTI [4]: This dataset rounds out the validation by adding diversity beyond the Cityscapes-based datasets.

Models

We choose a variety of models with different approaches to uncertainty estimation. Each method has its own training requirements and adds its own computational cost for uncertainty estimation. All models start from the pre-trained YOLO11n weights provided by Ultralytics [5]. We keep one pre-trained baseline without further modification and fine-tune five other variants.

- YOLO11n off-the-shelf

- Base Pretrained: Uncertainty = 1 - max. classification confidence.

- YOLO11n fine-tuned on Cityscapes with further uncertainty modifications

- Base Confidence: Uncertainty = 1 - max. classification confidence.

- Base Uncertainty: Uncertainty = Entropy of predicted class label distribution.

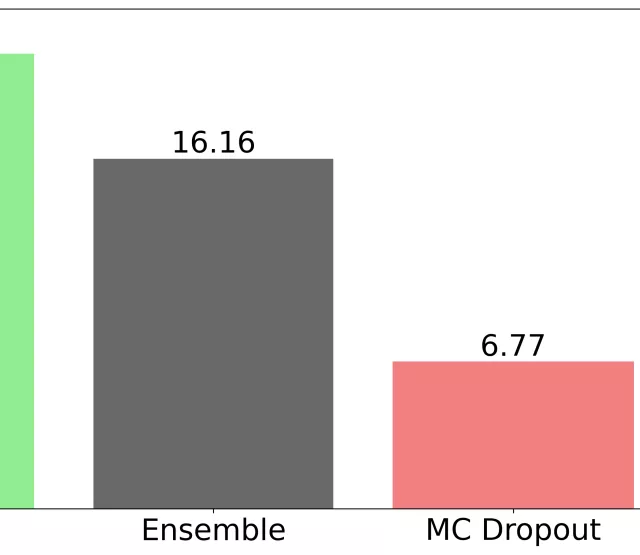

- Ensemble [6]: Uncertainty = Entropy from multiple classification heads.

- MC Dropout [7]: Uncertainty = Entropy from multiple forward passes through the classification head.

- EDL MEH [8]: Uncertainty = Derived from the predicted parameters of a prior distribution over the categorical class probabilities.
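To make the difference between the confidence-based and the entropy-based scores concrete, here is a minimal sketch in plain Python. It is not the code used in the project. The function names are hypothetical, and two details are assumptions: the class scores are renormalised to sum to 1 before the entropy is computed (YOLO-style detectors typically output independent per-class scores), and the entropy is divided by the log of the number of classes so that it lies in [0, 1]. For Ensemble and MC Dropout, the sketch assumes that the score vectors from the individual heads or forward passes are averaged before taking the entropy.

```python
import math
from typing import Sequence


def confidence_uncertainty(scores: Sequence[float]) -> float:
    """Uncertainty = 1 - max. classification confidence."""
    return 1.0 - max(scores)


def entropy_uncertainty(scores: Sequence[float]) -> float:
    """Entropy of the class scores, scaled to [0, 1].

    Assumptions: scores are renormalised to sum to 1 first, and the
    entropy is divided by log(number of classes).
    """
    total = sum(scores)
    if len(scores) < 2 or total <= 0.0:
        raise ValueError("need at least two classes with a positive score sum")
    probs = [s / total for s in scores]
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return entropy / math.log(len(probs))


def sampled_entropy_uncertainty(samples: Sequence[Sequence[float]]) -> float:
    """Entropy of the mean score vector over several heads or forward passes.

    Assumption: the per-sample score vectors are averaged class by class.
    """
    mean_scores = [sum(column) / len(samples) for column in zip(*samples)]
    return entropy_uncertainty(mean_scores)
```

With this sketch, two heads that each predict a different class with full confidence have zero entropy individually, but their averaged prediction has maximal entropy. This is the kind of disagreement that sampling-based methods are meant to capture.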

Metrics

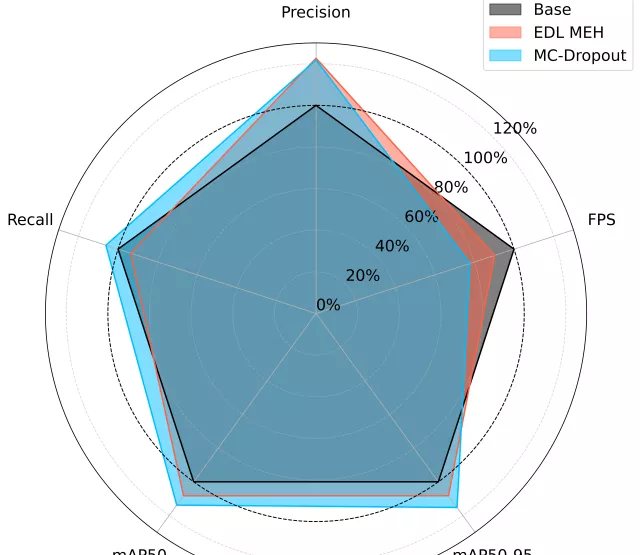

This evaluation focuses on two metrics.

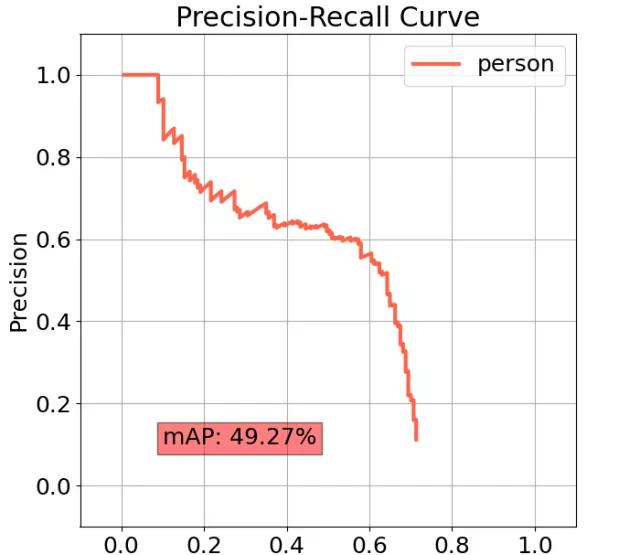

- mAP50-95: Standard object detection quality metric [9]. Higher is better. "50-95" refers to the Intersection-over-Union (IoU) thresholds, from 50% to 95%, that a prediction's overlap with a true object must reach for the prediction to count as correct. The metric is averaged over these thresholds.

- mUE50-95: Minimum Uncertainty Error [10] across IoU thresholds of 50–95%. Lower is better. The plot below shows 1 − mUE50-95, so that higher is better, in line with the interpretation of mAP.
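The following sketch illustrates one way such an uncertainty error can be computed. It follows our reading of the uncertainty error in [10]: detections with an uncertainty above a threshold are rejected, and the error is the balanced average of the share of correct detections that get rejected and the share of incorrect detections that get accepted. The minimum over all thresholds is then taken. Everything else is an assumption made for illustration and not necessarily what our evaluation code does: the function names are hypothetical, a detection counts as correct when its IoU with its best-matching true object reaches the IoU threshold, an empty group contributes an error of zero, and the result is averaged over the ten IoU thresholds.

```python
from typing import Sequence


def uncertainty_error(uncertainties: Sequence[float],
                      is_correct: Sequence[bool],
                      threshold: float) -> float:
    """Balanced error when accepting detections with uncertainty <= threshold."""
    correct = [u for u, c in zip(uncertainties, is_correct) if c]
    incorrect = [u for u, c in zip(uncertainties, is_correct) if not c]
    # Assumed convention: an empty group contributes zero error.
    rejected_correct = (sum(u > threshold for u in correct) / len(correct)
                        if correct else 0.0)
    accepted_incorrect = (sum(u <= threshold for u in incorrect) / len(incorrect)
                          if incorrect else 0.0)
    return 0.5 * rejected_correct + 0.5 * accepted_incorrect


def minimum_uncertainty_error(uncertainties: Sequence[float],
                              is_correct: Sequence[bool]) -> float:
    """Smallest uncertainty error over all possible thresholds."""
    # The error only changes at observed uncertainty values, so it is enough
    # to try each of them plus one threshold below all (reject everything).
    observed = sorted(set(uncertainties))
    candidates = [observed[0] - 1.0] + observed
    return min(uncertainty_error(uncertainties, is_correct, t)
               for t in candidates)


def mue_50_95(uncertainties: Sequence[float], ious: Sequence[float]) -> float:
    """Mean of the minimum uncertainty error over IoU thresholds 0.50..0.95.

    Assumption: ious[i] is the IoU of detection i with its best-matching
    true object of the same class (0.0 if there is none).
    """
    iou_thresholds = [(50 + 5 * i) / 100 for i in range(10)]
    errors = [
        minimum_uncertainty_error(uncertainties, [iou >= t for iou in ious])
        for t in iou_thresholds
    ]
    return sum(errors) / len(errors)
```

Under this definition, a score of 0 means that some threshold separates correct from incorrect detections perfectly, and 0.5 means that the uncertainty carries no information about correctness.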

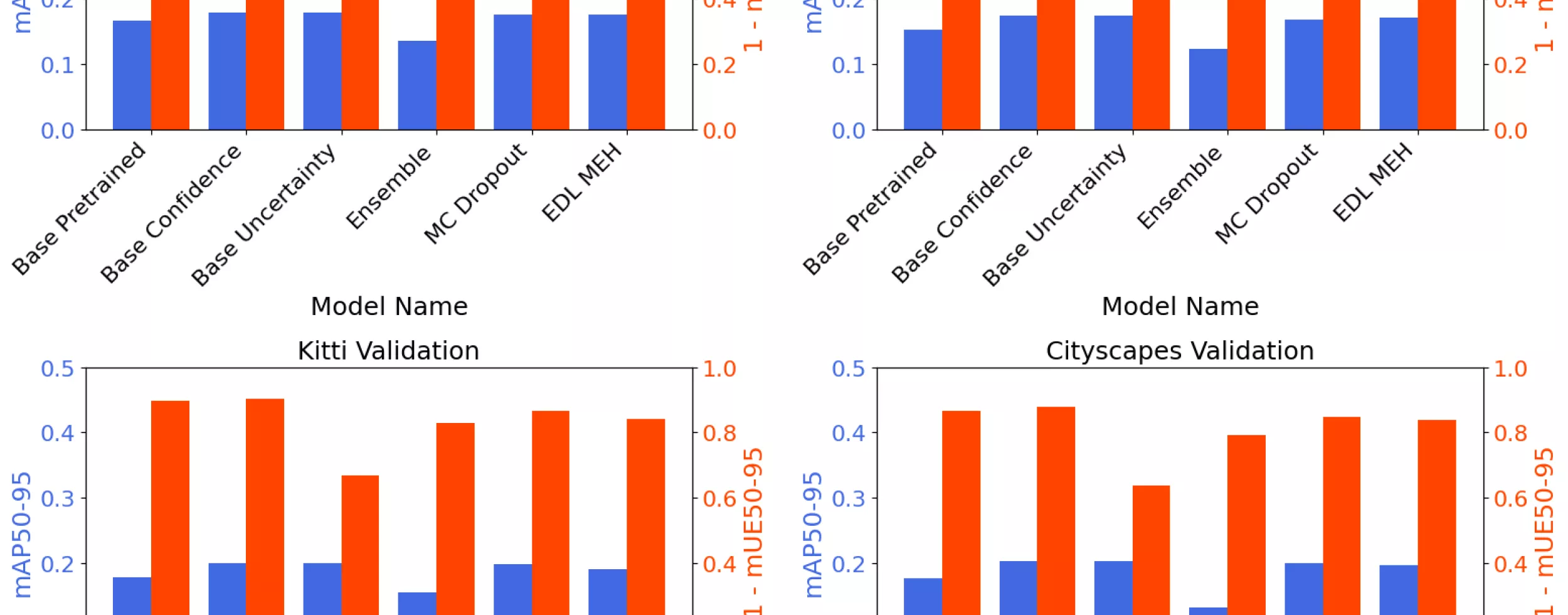

Results

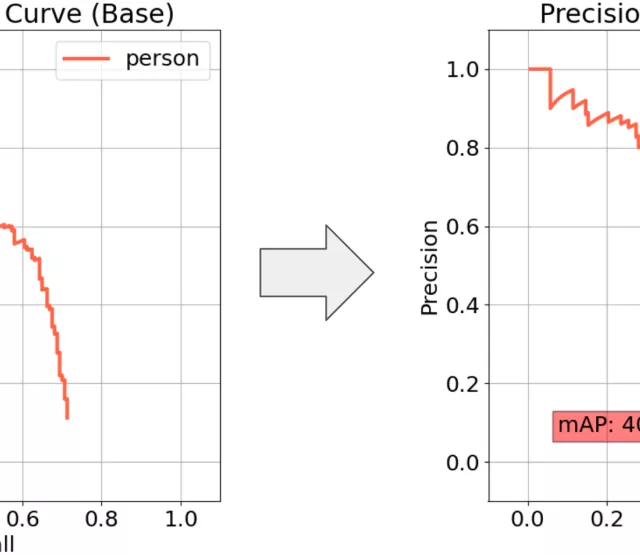

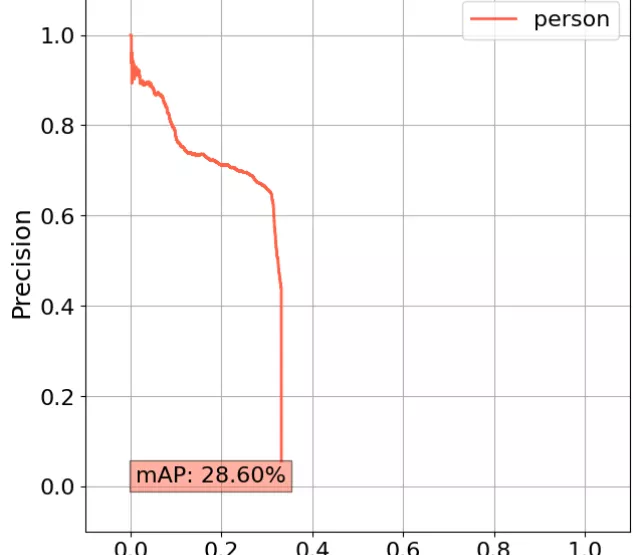

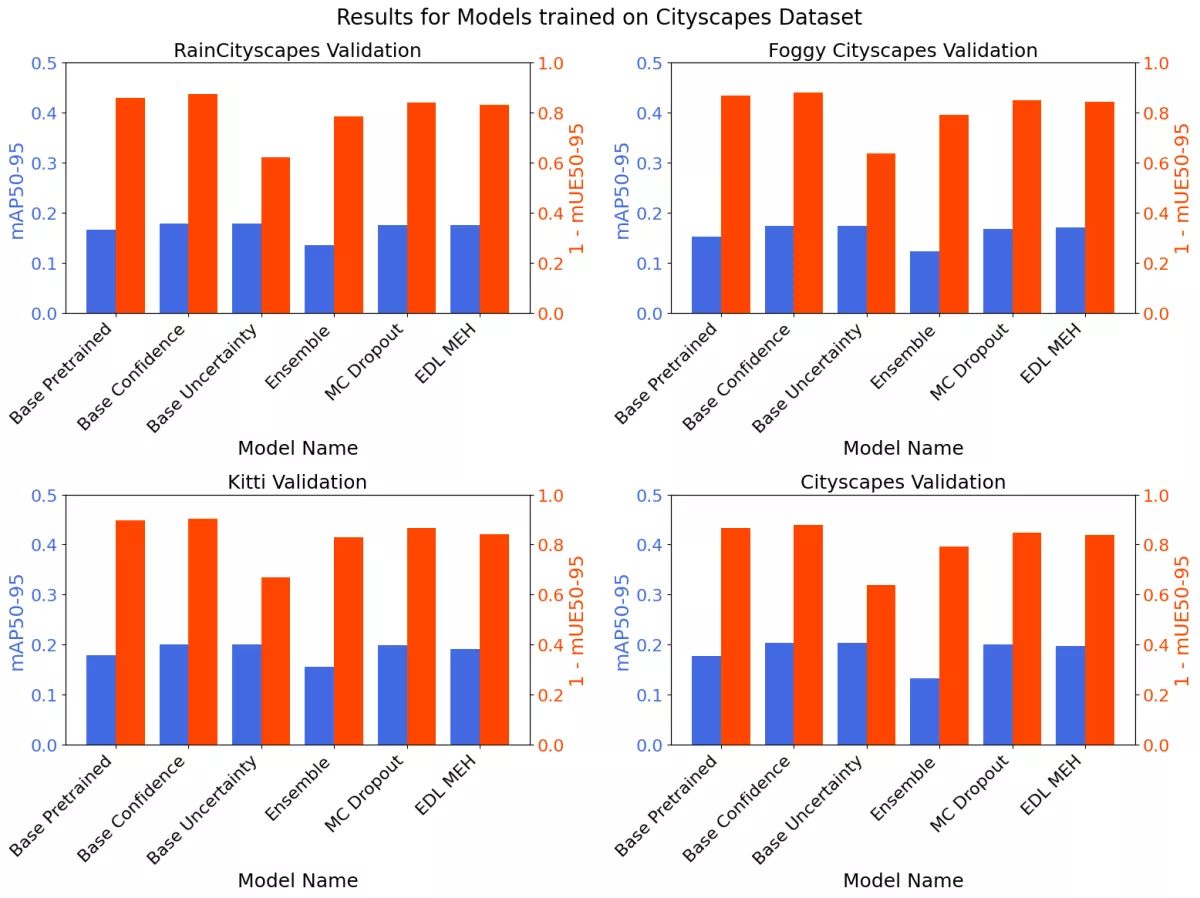

The results show relatively high mAP values (blue) for the Base Pretrained model and for the fine-tuned Base Confidence model. More interestingly, both also have a low uncertainty error (mUE50-95), and correspondingly high certainty (1 − mUE50-95), despite their naive approach of treating uncertainty simply as the complement of the classification confidence. Base Uncertainty, which uses the entropy of the classification scores, is markedly worse at estimating uncertainty. Ensemble shows the strongest decline in mAP50-95. MC Dropout and EDL MEH perform well but do not surpass the Base Confidence model overall.

Conclusion

In this evaluation setting (datasets and metrics), the more sophisticated uncertainty estimation approaches (Ensemble, MC Dropout, EDL MEH) did not beat a simple baseline (Base Confidence). This suggests that pre-trained baselines are already well calibrated and therefore benefit little from added uncertainty estimation methods, which may even degrade their performance. There is still room for improvement, for example through hyperparameter optimization of the uncertainty approaches. The next steps will focus on refining the evaluation with better-tuned models; additional datasets and metrics may also reveal further insights.

References

[1] Marius Cordts, Mohamed Omran, Sebastian Ramos, Timo Rehfeld, Markus Enzweiler, Rodrigo Benenson, Uwe Franke, Stefan Roth, and Bernt Schiele. The Cityscapes Dataset for Semantic Urban Scene Understanding. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3213–3223, 2016.

[2] Christos Sakaridis, Dengxin Dai, and Luc Van Gool. Semantic Foggy Scene Understanding with Synthetic Data. International Journal of Computer Vision, 126(9):973–992, September 2018.

[3] Xiaowei Hu, Chi-Wing Fu, Lei Zhu, and Pheng-Ann Heng. 2019. Depth-Attentional Features for Single-Image Rain Removal. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 8014–8023. https://doi.org/10.1109/CVPR.2019.00821

[4] Andreas Geiger, Philip Lenz, and Raquel Urtasun. 2012. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Conference on Computer Vision and Pattern Recognition (CVPR).

[5] Glenn Jocher and Jing Qiu. Ultralytics YOLO11, 2024.

[6] Matias Valdenegro-Toro. 2019. Deep Sub-Ensembles for Fast Uncertainty Estimation in Image Classification. https://doi.org/10.48550/arXiv.1910.08168

[7] Sai Harsha Yelleni, Deepshikha Kumari, Srijith P.K., and Krishna Mohan C. 2024. Monte Carlo DropBlock for modeling uncertainty in object detection. Pattern Recognition 146: 110003. https://doi.org/10.1016/j.patcog.2023.110003

[8] Younghyun Park, Wonjeong Choi, Soyeong Kim, Dong-Jun Han, and Jaekyun Moon. Active learning for object detection with evidential deep learning and hierarchical uncertainty aggregation. In The Eleventh International Conference on Learning Representations, 2023.

[9] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C. Lawrence Zitnick. Microsoft COCO: Common objects in context. In David Fleet, Tomas Pajdla, Bernt Schiele, and Tinne Tuytelaars, editors, Computer Vision – ECCV 2014, pages 740–755, Cham, 2014. Springer International Publishing.

[10] Dimity Miller, Feras Dayoub, Michael Milford, and Niko Sünderhauf. 2019. Evaluating Merging Strategies for Sampling-based Uncertainty Techniques in Object Detection. In 2019 International Conference on Robotics and Automation (ICRA), 2348–2354. https://doi.org/10.1109/ICRA.2019.8793821