Funding year 2024 / Project Call #19 / Project ID: 7442 / Project: LEO Trek

WebAssembly (Wasm) has emerged as a promising runtime for serverless computing: it is portable, lightweight, and secure, and it runs across heterogeneous environments ranging from edge devices to cloud nodes. However, the actual performance trade-offs between Wasm and containers in realistic serverless setups remain unclear. We therefore present Lumos, a performance model and open-source benchmarking tool that makes these trade-offs measurable and comparable in the Edge–Cloud Continuum.

Our main contributions are:

-

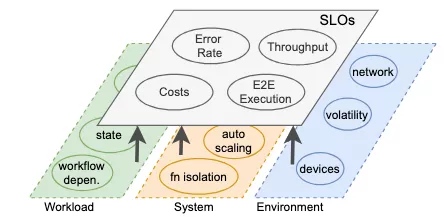

Lumos, a performance model for serverless functions that links workload, system, and environment factors to SLOs such as latency, throughput, and cost in the Edge–Cloud Continuum.

-

A modular benchmarking tool that evaluates serverless functions across multiple runtimes (containers, Wasm interpreted, Wasm ahead-of-time), workloads (compute- and data-intensive), and execution conditions (cold/warm starts, concurrency, BaaS access).

-

A performance characterization of WebAssembly as a serverless runtime, quantifying when AoT-compiled Wasm is beneficial and when containers still dominate in practice.
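The benchmarking dimensions listed in the contributions form a configuration space that can be enumerated as a cross product. The following is a minimal sketch of such an experiment matrix, not code from the Lumos repository: the runtime and workload labels, the `Experiment` type, and the concurrency levels (1, 10, 50) are hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical labels mirroring the dimensions named in the contributions;
# they are illustrative, not identifiers from the Lumos code base.
RUNTIMES = ("container", "wasm-interpreted", "wasm-aot")
WORKLOADS = ("compute-intensive", "data-intensive")
START_MODES = ("cold", "warm")
CONCURRENCY_LEVELS = (1, 10, 50)  # assumed levels, chosen only for the example


@dataclass(frozen=True)
class Experiment:
    runtime: str
    workload: str
    start_mode: str
    concurrency: int
    baas_access: bool  # whether the function reads/writes a BaaS store such as Redis


def experiment_matrix(with_baas=(False, True)):
    """Return every combination of runtime, workload, and execution condition."""
    return [
        Experiment(r, w, s, c, b)
        for r, w, s, c, b in product(
            RUNTIMES, WORKLOADS, START_MODES, CONCURRENCY_LEVELS, with_baas
        )
    ]


if __name__ == "__main__":
    matrix = experiment_matrix()
    # 3 runtimes * 2 workloads * 2 start modes * 3 concurrency levels * 2 BaaS settings
    print(len(matrix))
```

Enumerating the full matrix up front makes it easy to run every runtime under identical conditions, which is what allows results for containers and the two Wasm modes to be compared directly.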

Lumos Performance Model and Architecture

Lumos introduces a serverless performance model that groups performance drivers into workload, system, and environment dimensions. It captures how factors such as data I/O, serialization, function isolation, autoscaling behavior, device heterogeneity, and network variability jointly impact SLOs. Architecturally, Lumos separates the user, control, and data planes, with functions and traces in the user plane, build/deploy and invocation control in the control plane, and fine-grained telemetry (CPU, memory, I/O, serialization) in the data plane. This design enables reproducible experiments across different runtimes without requiring changes to the application code.
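To make the grouping into workload, system, and environment dimensions concrete, the sketch below encodes a few of the named factors as data types and combines them into a latency estimate checked against an SLO. The additive decomposition (startup + isolation + compute + serialization + I/O) is an illustrative assumption, not the formulation used by Lumos, and all field names are hypothetical; autoscaling and device heterogeneity are omitted for brevity.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    compute_ms: float        # pure function execution time
    payload_bytes: int       # data moved per invocation (data I/O)
    serialization_ms: float  # encode/decode cost of the payload


@dataclass
class System:
    isolation_overhead_ms: float  # sandbox/runtime overhead (container vs. Wasm)
    cold_start_ms: float          # image pull plus instance startup on a cold start


@dataclass
class Environment:
    bandwidth_bytes_per_ms: float  # effective bandwidth to the data store
    network_rtt_ms: float          # round-trip time between function and data store


def estimate_latency_ms(w: Workload, s: System, e: Environment, cold: bool) -> float:
    """Additive end-to-end latency estimate (an assumption made for illustration)."""
    io_ms = e.network_rtt_ms + w.payload_bytes / e.bandwidth_bytes_per_ms
    startup_ms = s.cold_start_ms if cold else 0.0
    return startup_ms + s.isolation_overhead_ms + w.compute_ms + w.serialization_ms + io_ms


def meets_slo(latency_ms: float, slo_ms: float) -> bool:
    """True if the estimated latency stays within the latency SLO."""
    return latency_ms <= slo_ms


if __name__ == "__main__":
    # Hypothetical inputs: 1 MB payload over a 100 Mbit/s link (12,500 bytes/ms).
    w = Workload(compute_ms=20.0, payload_bytes=1_000_000, serialization_ms=5.0)
    s = System(isolation_overhead_ms=2.0, cold_start_ms=300.0)
    e = Environment(bandwidth_bytes_per_ms=12_500.0, network_rtt_ms=3.0)
    for cold in (False, True):
        latency = estimate_latency_ms(w, s, e, cold)
        print(cold, latency, meets_slo(latency, slo_ms=200.0))
```

Keeping the three dimensions as separate types mirrors the model's idea that the same workload can be re-evaluated under a different runtime (system) or network (environment) without touching the function itself.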

Benchmarking WebAssembly vs Containers

Lumos benchmarks eight Rust-based serverless functions on a heterogeneous edge cluster using Knative, MicroK8s, Redis, runc, and WasmEdge (interpreted and AoT). The results show that AoT-compiled Wasm images are up to 30× smaller and can reduce cold-start latency by up to 16% compared to containers, which is particularly relevant in bandwidth-constrained environments. At the same time, interpreted Wasm suffers from 30–55× higher warm latency and 7–10× higher I/O and serialization overhead, and even AoT-compiled Wasm still incurs up to 2× higher data-handling overhead in many cases. Containers consistently achieve lower CPU and memory usage and scale significantly better under high concurrency; AoT compilation narrows this gap but does not fully close it.
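The reported figures are relative metrics: "N× smaller/higher" is a ratio, and "reduces by X%" is a percent reduction against a baseline. The helpers below show how such numbers are derived and why a smaller image matters on a slow link. All inputs in the usage part (a 150 MB container image, a 5 MB Wasm image, a 10 Mbit/s link, 1,000 ms vs. 840 ms cold starts) are hypothetical values chosen to reproduce the reported ratios, not measurements from the study.

```python
def ratio(value: float, baseline: float) -> float:
    """How many times larger `value` is than `baseline` (30.0 means 30x)."""
    return value / baseline


def percent_reduction(value: float, baseline: float) -> float:
    """Relative reduction of `value` compared with `baseline`, in percent."""
    return (baseline - value) / baseline * 100.0


def transfer_time_s(size_mb: float, bandwidth_mbit_s: float) -> float:
    """Seconds needed to pull `size_mb` megabytes over a `bandwidth_mbit_s` link."""
    return size_mb * 8.0 / bandwidth_mbit_s


if __name__ == "__main__":
    # Hypothetical image sizes and link speed, for illustration only.
    container_mb, wasm_aot_mb, link_mbit_s = 150.0, 5.0, 10.0
    print("image size ratio:", ratio(container_mb, wasm_aot_mb))
    print("container pull (s):", transfer_time_s(container_mb, link_mbit_s))
    print("Wasm AoT pull (s):", transfer_time_s(wasm_aot_mb, link_mbit_s))

    # Hypothetical cold-start latencies in milliseconds.
    print("cold-start reduction (%):", percent_reduction(840.0, 1000.0))
```

Note that a 30× smaller image does not translate into a 30× faster cold start: image transfer is only one component of startup, which is consistent with the much smaller (up to 16%) cold-start improvement reported above.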

Lumos is implemented as a modular, open-source framework that automates build, deployment, telemetry collection, and analysis for serverless benchmarks across containers and Wasm. In future work, Lumos will be extended to support real-world traces and the 3D Compute Continuum, which includes space, enabling developers and researchers to explore performance trade-offs for serverless workloads running across edge, cloud, and orbital environments without requiring real satellite deployments.
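The invocation and analysis stages of such a pipeline can be sketched with the standard library alone: issue a fixed number of calls at a given concurrency, record per-call latency, and summarize the distribution as median and 99th percentile. This is a hypothetical sketch, not the Lumos API; in a real deployment `fn` would send an HTTP request to the deployed function, whereas here a local CPU-bound callable stands in for it, and build, deployment, and telemetry collection are not covered.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def timed_call(fn: Callable[[], object]) -> float:
    """Invoke `fn` once and return its wall-clock latency in milliseconds."""
    start = time.perf_counter()
    fn()
    return (time.perf_counter() - start) * 1000.0


def run_benchmark(fn: Callable[[], object], invocations: int, concurrency: int) -> List[float]:
    """Issue `invocations` calls with at most `concurrency` in flight; return latencies."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(lambda _: timed_call(fn), range(invocations)))


def summarize(latencies_ms: List[float]) -> Dict[str, float]:
    """Median and 99th-percentile latency (needs at least two samples)."""
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "n": len(latencies_ms),
        "p50": statistics.median(latencies_ms),
        "p99": cuts[98],  # the 99th of the 99 cut points
    }


if __name__ == "__main__":
    # Hypothetical stand-in for a compute-intensive serverless function.
    def stand_in_function() -> int:
        return sum(i * i for i in range(10_000))

    for level in (1, 4, 16):
        print(level, summarize(run_benchmark(stand_in_function, invocations=200, concurrency=level)))
```

Reporting tail latency (p99) alongside the median matters under concurrency, since contention typically shows up in the tail before it shifts the median.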

The source code of Lumos is available on GitHub, and further details can be found in our paper, which was presented at the ACM IoT 2025 conference.