Funding year 2023 / Scholarships Call #18 / Project ID: 6851 / Project: Reliability of Edge Offloadings

In this blog, we cover how to model shared edge computing infrastructure as a queueing system that captures multi-device workloads.

Motivation

The most common edge offloading setting is one in which multiple mobile devices concurrently offload tasks to shared, heterogeneous remote servers. To capture such a multi-device workload, we employ queueing theory, which provides a formal framework for analyzing key performance metrics of dynamic systems. In dynamic edge offloading, estimating performance metrics such as task response time is critical because offloaded applications can be latency-sensitive. A workload of application tasks that arrive from multiple mobile devices at different rates and are served by multiple servers of different capacities can be volatile and can change response times quickly. The main purpose of the queueing model is therefore to estimate task response time accurately under such a multi-device workload.

Queue Modeling of Edge Offloading

In general, every queue has three elements: the input arrival process, the waiting process, and the output service process. The input arrival process captures the rate at which tasks arrive at the queue. The waiting process captures tasks waiting to be served in a queue of finite size (its maximum queue length), while the service process executes the tasks. Together, these processes determine the queueing latency that a task experiences when it is offloaded through the queue, so all three must be modeled to simulate and estimate that latency realistically.
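To make the three elements concrete, here is a minimal Python sketch of a single first-come-first-served queue with finite capacity. It assumes Poisson arrivals and exponentially distributed service times (as in the M/M/1 queues used below) and drops tasks that arrive while the queue is full. The function `simulate_fcfs_queue` and its parameters are hypothetical illustrations, not part of the project's simulator.

```python
import random
from collections import deque


def simulate_fcfs_queue(arrival_rate, service_rate, capacity, num_tasks, seed=0):
    """Simulate one single-server FCFS queue with a finite capacity.

    arrival_rate: mean task arrivals per second (input arrival process)
    service_rate: mean tasks served per second (output service process)
    capacity: maximum number of tasks in the system, waiting plus in service (>= 1)
    Returns (mean response time of accepted tasks, fraction of dropped tasks).
    """
    rng = random.Random(seed)
    in_system = deque()  # departure times of tasks currently waiting or in service
    clock = 0.0
    total_response = 0.0
    accepted = 0
    dropped = 0
    for _ in range(num_tasks):
        # Input arrival process: exponential inter-arrival times (Poisson arrivals).
        clock += rng.expovariate(arrival_rate)
        # Forget tasks that have already left the system when this task arrives.
        while in_system and in_system[0] <= clock:
            in_system.popleft()
        # Waiting process: a full queue rejects the arriving task.
        if len(in_system) >= capacity:
            dropped += 1
            continue
        # FCFS: service starts once the server finishes the last task ahead of this one.
        start = max(clock, in_system[-1]) if in_system else clock
        # Output service process: exponential service time.
        departure = start + rng.expovariate(service_rate)
        in_system.append(departure)
        total_response += departure - clock
        accepted += 1
    return total_response / accepted, dropped / num_tasks


mean_rt, drop_ratio = simulate_fcfs_queue(0.5, 1.0, capacity=10_000, num_tasks=200_000)
print(f"mean response time: {mean_rt:.2f} s, dropped: {drop_ratio:.1%}")
```

With a very large capacity, the sketch should approach the M/M/1 mean response time 1/(μ - λ), about 2 s for λ = 0.5 and μ = 1 tasks per second. With a small capacity under overload, a large share of tasks is dropped instead of waiting.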

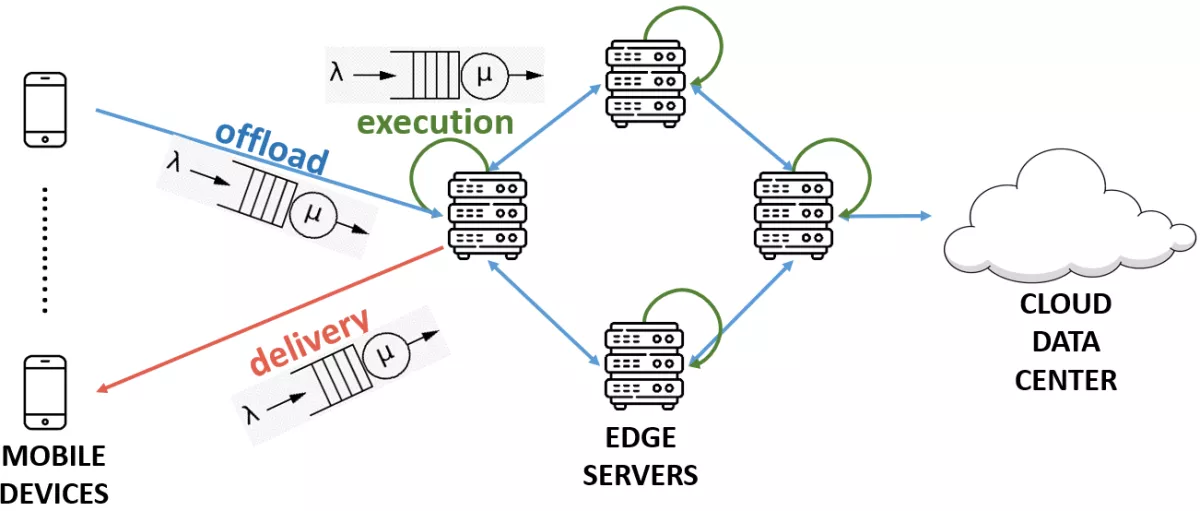

The edge offloading queueing system consisting of multiple queues is illustrated in the following figure:

The edge offloading queueing system consists of three parts: (i) a task offloading queue, which simulates the offloading process in which mobile devices concurrently offload tasks to servers through a shared communication channel; (ii) a task execution queue, which simulates the execution process in which servers share their resources among tasks from different devices; and (iii) a task delivery queue, which simulates the delivery process in which task results are returned to the mobile devices through the shared communication channel. The queueing latencies of these three processes add up to the total application latency, which should stay below a predefined time threshold.
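The sum of the three queueing latencies can be sketched in Python as follows. The sketch assumes that each stage is an M/M/1 queue with mean response time 1/(μ - λ), that a communication channel serves tasks at a rate of bandwidth divided by data size, and that a single device-server pair carries all tasks. All function names and parameter values are hypothetical.

```python
def mm1_response_time(arrival_rate, service_rate):
    """Mean time a task spends in an M/M/1 queue (waiting plus service), in seconds."""
    if arrival_rate >= service_rate:
        raise ValueError("unstable queue: arrival rate must be below service rate")
    return 1.0 / (service_rate - arrival_rate)


def application_latency(arrival_rate, input_mbit, output_mbit,
                        uplink_mbps, downlink_mbps, server_tasks_per_s):
    """Sum of offloading, execution, and delivery queueing latencies, in seconds."""
    # Offloading queue: the uplink "serves" uploads at bandwidth / data size tasks per second.
    t_offload = mm1_response_time(arrival_rate, uplink_mbps / input_mbit)
    # Execution queue: the server processes server_tasks_per_s tasks per second.
    t_execute = mm1_response_time(arrival_rate, server_tasks_per_s)
    # Delivery queue: results travel back over the downlink.
    t_deliver = mm1_response_time(arrival_rate, downlink_mbps / output_mbit)
    return t_offload + t_execute + t_deliver


# Hypothetical values: 4 tasks/s, 2 Mbit inputs, 0.5 Mbit results, 0.5 s threshold.
latency = application_latency(arrival_rate=4.0, input_mbit=2.0, output_mbit=0.5,
                              uplink_mbps=20.0, downlink_mbps=40.0, server_tasks_per_s=8.0)
print(latency < 0.5)  # True: 1/6 + 1/4 + 1/76 is about 0.43 s
```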

The offloading and delivery communication queues are realized as M/M/1 queues. In M/M/1 notation, the first and second "M" stand for "memoryless" (Markovian) and denote stochastic arrival and service processes, respectively: tasks arrive according to a Poisson process, and service times are exponentially distributed. The "1" indicates a single server. In our work, each mobile device and server pair is treated as an independent queue. The M/M/1 queue is a suitable model for data transmission because the service rate of each queue can reflect a different bandwidth in the offloading and delivery directions. The task execution, on the other hand, is modeled as an interconnected queueing network in which each edge and cloud server is a distinct M/M/1 queue. In this network, tasks can move from one queue to another, which captures the dependencies between tasks of the same application that are offloaded to and executed on different servers.
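One common way to model an interconnected network of M/M/1 servers is an open Jackson network, in which a task finishing at one server moves to another server with a fixed probability. The sketch below assumes that model (the routing mechanism is not specified above): it solves the traffic equations for each server's total arrival rate and then applies Little's law to obtain the mean end-to-end execution time. The function names and the edge/cloud example values are hypothetical, and the fixed-point iteration only converges if every task eventually leaves the network.

```python
def solve_traffic_equations(external_rates, routing, tol=1e-12, max_iter=10_000):
    """Solve lambda_i = gamma_i + sum_j lambda_j * routing[j][i] by fixed-point iteration.

    external_rates[i]: tasks per second arriving at server i directly from devices
    routing[j][i]: probability that a task finishing at server j moves on to server i
    (a row may sum to less than 1; the remainder leaves the network).
    """
    n = len(external_rates)
    rates = list(external_rates)
    for _ in range(max_iter):
        new_rates = [external_rates[i] + sum(rates[j] * routing[j][i] for j in range(n))
                     for i in range(n)]
        if max(abs(a - b) for a, b in zip(new_rates, rates)) < tol:
            return new_rates
        rates = new_rates
    raise RuntimeError("traffic equations did not converge")


def network_mean_response_time(external_rates, routing, service_rates):
    """Mean end-to-end time a task spends in an open Jackson network of M/M/1 servers."""
    rates = solve_traffic_equations(external_rates, routing)
    in_system = 0.0
    for lam, mu in zip(rates, service_rates):
        rho = lam / mu
        if rho >= 1.0:
            raise ValueError("unstable server: utilization must be below 1")
        # Mean number of tasks at an M/M/1 node: rho / (1 - rho).
        in_system += rho / (1.0 - rho)
    # Little's law over the whole network: T = L / total external arrival rate.
    return in_system / sum(external_rates)


# Hypothetical setup: server 0 is an edge server, server 1 a cloud server.
# Devices send 6 tasks/s to the edge; half of the finished edge tasks continue in the cloud.
t = network_mean_response_time(external_rates=[6.0, 0.0],
                               routing=[[0.0, 0.5], [0.0, 0.0]],
                               service_rates=[10.0, 12.0])
print(f"mean execution time: {t:.3f} s")  # 0.25 s at the edge + 0.5 * 1/9 s in the cloud
```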

For all queues, we employ a non-preemptive first-come, first-served (FCFS) scheduling policy. Because a task is never interrupted once it starts, the policy shares resources fairly and makes task completion predictable, which allows a more accurate estimation of task response time. We assume that the edge offloading queueing system is stable, i.e., resource constraints are respected so that every queue's arrival rate stays below its service rate. We also assume that the offloading and delivery communication channels operate on different frequencies to avoid interference.
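The stability assumption can be checked per queue through the utilization ρ = λ/μ, which must stay below 1. The sketch below, a hypothetical helper rather than part of the described system, also reports the mean FCFS waiting time of an M/M/1 queue, ρ/(μ - λ). Because the offloading and delivery channels are assumed not to interfere, they appear as separate queues; the queue names and rates are illustrative only.

```python
def queue_report(queues):
    """Return {name: (utilization, mean FCFS waiting time)} for M/M/1 queues.

    queues maps a queue name to (arrival_rate, service_rate) in tasks per second.
    Raises ValueError if any queue violates the stability condition rho < 1.
    """
    report = {}
    for name, (arrival_rate, service_rate) in queues.items():
        rho = arrival_rate / service_rate
        if rho >= 1.0:
            raise ValueError(f"{name} is unstable: utilization {rho:.2f} >= 1")
        # Mean time spent waiting before service starts (excludes the service time itself).
        mean_wait = rho / (service_rate - arrival_rate)
        report[name] = (rho, mean_wait)
    return report


# Hypothetical queues; uplink and downlink are separate, non-interfering queues.
report = queue_report({
    "device1 uplink": (4.0, 10.0),
    "edge server": (6.0, 10.0),
    "device1 downlink": (4.0, 80.0),
})
for name, (rho, wait) in report.items():
    print(f"{name}: rho={rho:.2f}, mean wait={wait:.3f} s")
```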

Future Steps

We will implement a simulator that realistically reproduces edge offloading behavior based on the presented queueing system, and we will extend it with additional features relevant to edge offloading. A simulator allows us to (i) evaluate the proposed offloading solution before real-world deployment, (ii) stress it under workloads that are hard to reproduce in the real world, and (iii) verify it as infrastructure size and workload volume scale up, which is difficult in practice because of limited available equipment.